
Machine learning relies heavily on neural networks: computing systems loosely inspired by the human brain. Neural networks depend on training data to learn and improve their accuracy over time. Once these learning algorithms are fine-tuned for accuracy, they become powerful tools in artificial intelligence, allowing us to classify and cluster data at high speed.

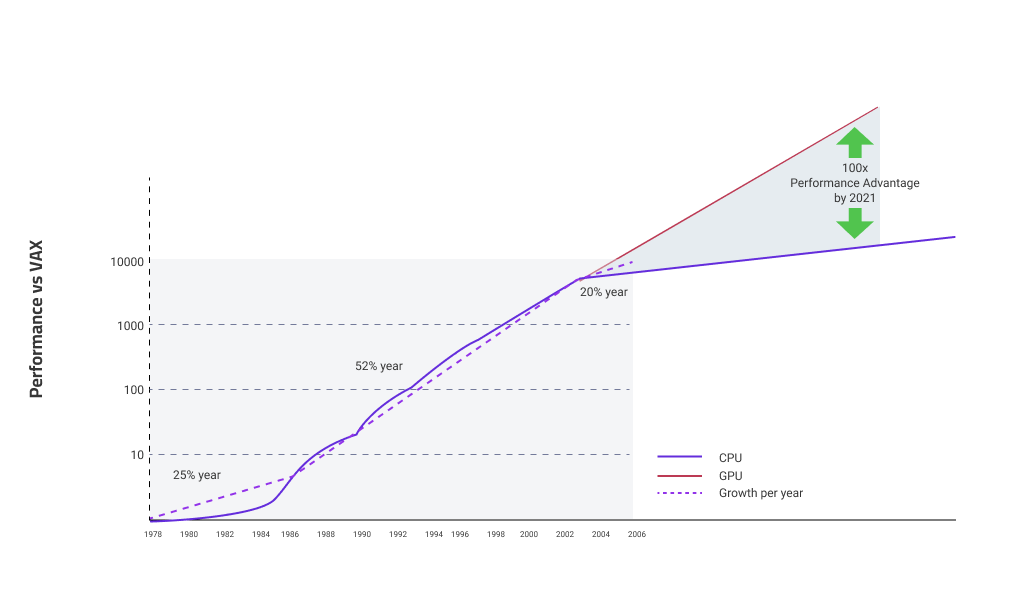

Unfortunately, traditional CPUs severely limit this computational capacity. CPUs contain relatively few cores (typically tens), each optimised for sequential processing, whereas GPUs contain thousands of cores built for parallel work and promise to accelerate AI/ML innovation. To give you an idea, NVIDIA's flagship Ampere architecture A100 GPU packs 54 billion transistors optimised for parallel processing, offering up to 20X higher performance than the prior generation.

Such high-performing GPUs play an important role in AI/ML breakthroughs across healthcare, scientific research, finance, transportation, and more.

How are GPUs Used in AI?

GPUs can speed up deep neural network training while offering power efficiency, wide availability in data centres and the cloud, and affordability. Here’s how the widespread adoption of GPU acceleration is driving AI's recent breakthroughs.

Why are GPUs Used for AI?

AI applications, especially those based on deep learning, require the processing of large datasets and the execution of complex mathematical operations. The parallel processing capabilities of GPUs make them ideal for these tasks. Unlike Central Processing Units (CPUs), which are designed to handle a few complex tasks in sequence, GPUs can handle thousands of simpler, parallel tasks simultaneously. This is crucial for training deep learning models, where millions of parameters need to be adjusted iteratively, and for inference, where these models make predictions based on new data.

GPU-Accelerated Applications in AI

- Image and Speech Recognition: In image recognition, deep learning models like Convolutional Neural Networks (CNNs) are used to identify and classify objects within images. GPUs accelerate the training of these models by efficiently handling the vast number of computations required for processing large image datasets. Similarly, in speech recognition, models such as Recurrent Neural Networks (RNNs) benefit from GPU acceleration, allowing for real-time speech-to-text conversion.

- Natural Language Processing (NLP): Applications such as machine translation, sentiment analysis, and text generation rely on models like Transformers and Bidirectional Encoder Representations from Transformers (BERT). These models, due to their complex architecture, demand high computational power, which GPUs provide, thereby reducing training time from weeks to days.

- Autonomous Vehicles: Self-driving cars use AI models to interpret sensor data, make decisions, and learn from new scenarios. GPUs are crucial in processing the massive amount of data generated by the vehicle's sensors in real-time, ensuring quick and accurate decision-making.
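To give a feel for why CNN training is so computation-heavy, here is a minimal pure-Python sketch of a 2D convolution (no GPU involved). It assumes a single-channel "valid" (no padding, stride 1) convolution, computed as cross-correlation the way most deep learning frameworks do. The helper `conv_macs` is a hypothetical name that counts multiply-accumulate operations for one layer; the layer shape used in the example (a 224 x 224 RGB image, 3 x 3 kernels, 64 output channels) is an illustrative assumption.

```python
def conv2d(image, kernel):
    """'Valid' 2D convolution (cross-correlation, as in most DL frameworks)."""
    kh, kw = len(kernel), len(kernel[0])
    oh, ow = len(image) - kh + 1, len(image[0]) - kw + 1
    # Each output pixel depends only on one kh x kw patch of the input,
    # so all oh * ow outputs are independent and could be computed in parallel.
    return [
        [sum(image[i + u][j + v] * kernel[u][v] for u in range(kh) for v in range(kw))
         for j in range(ow)]
        for i in range(oh)
    ]


def conv_macs(h, w, k, c_in, c_out):
    """Multiply-accumulates for one stride-1, 'valid' conv layer on one image."""
    return (h - k + 1) * (w - k + 1) * k * k * c_in * c_out


print(conv2d([[1, 2, 3], [4, 5, 6], [7, 8, 9]], [[1, 0], [0, -1]]))  # [[-4, -4], [-4, -4]]
print(conv_macs(224, 224, 3, 3, 64))  # 85162752
```

Even this single hypothetical layer needs about 85 million multiply-accumulates per image, and a real network stacks many such layers and processes millions of images, which is where thousands of parallel GPU cores pay off.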

Accelerating AI Training with GPUs

The training phase of deep learning models is resource-intensive, and GPUs accelerate it by parallelising the computations. For instance, in a neural network, the weights and biases are updated after each batch of data is processed. A GPU computes the forward and backward passes for all the examples in a batch at the same time, significantly speeding up training.
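The batch-update loop described above can be sketched in plain Python. This is a minimal illustration, assuming a toy one-feature linear model `y = w*x + b` trained with mini-batch gradient descent on squared error; the function names, learning rate, batch size and synthetic data are all illustrative assumptions, and no GPU is used. The point is the structure: the per-example gradients inside a batch are independent of each other (the part a GPU computes in parallel), while the weight updates happen one batch after another.

```python
import random


def per_example_grad(w, b, x, y):
    """Gradient of the squared error 0.5 * (w*x + b - y)**2 for one example."""
    err = w * x + b - y
    return err * x, err  # (dL/dw, dL/db)


def train(data, lr=0.1, batch_size=8, epochs=200, seed=0):
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    data = list(data)
    for _ in range(epochs):
        rng.shuffle(data)
        for start in range(0, len(data), batch_size):
            batch = data[start:start + batch_size]
            # Each example's gradient depends only on (w, b) and that example,
            # so on a GPU these would all be computed at the same time.
            grads = [per_example_grad(w, b, x, y) for x, y in batch]
            gw = sum(g[0] for g in grads) / len(grads)
            gb = sum(g[1] for g in grads) / len(grads)
            # One update per batch: batches are still processed in sequence.
            w -= lr * gw
            b -= lr * gb
    return w, b


# Synthetic, noise-free data from y = 3x + 1
data = [(x / 10, 3.0 * (x / 10) + 1.0) for x in range(-20, 21)]
w, b = train(data)
print(round(w, 3), round(b, 3))
```

On a real GPU, frameworks express the per-example step as batched matrix operations rather than a Python list comprehension, but the dependency structure is the same.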

During inference, when a trained model is used to make predictions, speed is often critical, especially in applications like real-time language translation or autonomous driving. GPUs make these operations more efficient, allowing models to respond with lower latency.

AI Frameworks and Libraries Optimised for GPUs

Frameworks like TensorFlow and PyTorch have been optimised to take full advantage of GPU capabilities. These frameworks include support for GPU-based operations, allowing developers to focus on designing models rather than worrying about hardware optimisations. CUDA (Compute Unified Device Architecture), developed by NVIDIA, is a parallel computing platform and API model that enables dramatic increases in computing performance by harnessing the power of GPUs. Libraries such as cuDNN (CUDA Deep Neural Network) provide GPU-accelerated primitives for deep neural networks, further enhancing performance.
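As an illustration of how little device-specific code frameworks require, here is a minimal sketch using PyTorch, one of the frameworks named above. It assumes PyTorch may or may not be installed and falls back to the CPU when it is missing or when `torch.cuda.is_available()` reports no usable GPU. The helper names `pick_device` and `small_matmul_demo` and the 256 x 256 matrix size are hypothetical choices for this example.

```python
def pick_device() -> str:
    """Return "cuda" if PyTorch is installed and can see a CUDA GPU, else "cpu"."""
    try:
        import torch
    except ImportError:
        return "cpu"  # PyTorch not installed: fall back to the CPU
    return "cuda" if torch.cuda.is_available() else "cpu"


def small_matmul_demo(device: str):
    """Multiply two random matrices on the chosen device; None without PyTorch."""
    try:
        import torch
    except ImportError:
        return None
    a = torch.randn(256, 256, device=device)
    b = torch.randn(256, 256, device=device)
    # The same line runs on CPU or GPU; PyTorch dispatches to that device's kernels.
    return tuple((a @ b).shape)


device = pick_device()
print(f"Running on: {device}")
print(small_matmul_demo(device))
```

The model code itself does not change between CPU and GPU; only the `device` argument does, which is the kind of hardware abstraction the paragraph above describes.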

Machine Learning GPUs

With a parallel architecture of thousands of cores that perform floating-point operations simultaneously, GPUs can accelerate the training of machine learning models and statistical analysis on big data by orders of magnitude. Here’s how GPUs for machine learning are helping produce top-class innovations:

Statistical modelling

Statistical modelling, a cornerstone of machine learning, often involves complex computations like matrix multiplications and transformations. GPUs handle these operations highly efficiently thanks to their parallel processing capabilities. This efficiency is particularly noticeable in deep learning, a subset of ML that relies on large neural networks, which inherently involve extensive matrix operations.
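Matrix multiplication shows why these operations suit GPUs so well. In the minimal pure-Python sketch below (no GPU involved; the function name `matmul` is just illustrative), every element of the result is an independent dot product, so a GPU can assign those elements to different threads and compute them all at once.

```python
def matmul(a, b):
    """Multiply an n x k matrix by a k x m matrix (lists of lists)."""
    n, k = len(a), len(a[0])
    if len(b) != k:
        raise ValueError("inner dimensions must match")
    m = len(b[0])
    # Every output element c[i][j] is an independent dot product of row i of a
    # and column j of b, so all n * m of them could be computed in parallel.
    return [[sum(a[i][p] * b[p][j] for p in range(k)) for j in range(m)]
            for i in range(n)]


print(matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]]))  # [[19, 22], [43, 50]]
```

A neural network layer applied to a batch is essentially this operation at a much larger scale, which is why deep learning benefits most.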

Data Analysis

GPUs accelerate data processing tasks, including sorting, filtering, and aggregating large datasets. This capability is vital in exploratory data analysis, a critical step in ML where insights and patterns are derived from data. The parallel processing power of GPUs allows them to handle large volumes of data more efficiently than traditional CPUs.
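Aggregation is a good example of how GPUs parallelise data processing. A common technique is a tree-shaped (pairwise) reduction: instead of adding values one by one, all pairs at each level are added simultaneously, so summing n values takes about log2(n) parallel steps. The pure-Python sketch below simulates that pattern sequentially; `tree_sum` is a hypothetical helper, not a library function.

```python
def tree_sum(values):
    """Sum values pairwise, level by level, as a parallel reduction would.

    Returns (total, steps), where steps is the number of levels (about log2(n)).
    """
    level = list(values)
    if not level:
        return 0, 0
    steps = 0
    while len(level) > 1:
        # All pairs at this level are independent and could be added in parallel.
        nxt = [level[i] + level[i + 1] for i in range(0, len(level) - 1, 2)]
        if len(level) % 2:  # an odd element carries over to the next level
            nxt.append(level[-1])
        level = nxt
        steps += 1
    return level[0], steps


print(tree_sum(range(1, 1025)))  # (524800, 10)
```

Summing 1,024 values sequentially takes 1,023 additions in a row, whereas the tree needs only 10 levels, provided enough cores are available to do each level's additions at once.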

Faster Training and Testing of ML Models

- Parallel Processing Architecture: The architecture of a GPU, characterised by thousands of small, efficient cores, is designed for parallel processing. This contrasts with the few, highly complex cores of a CPU. GPUs can perform many of the operations in machine learning algorithms simultaneously, significantly speeding up the training and testing of models.

- Improved Accuracy and Performance: The speed of GPUs doesn't just make ML processes faster; it can also improve the resulting models. Faster computations allow for more iterations and experiments, leading to more finely tuned and accurate models. Processing large datasets in a shorter time frame also means models can be trained on more data, which generally leads to better performance.

- Deep Learning Advancements: Deep learning models, particularly those with many layers (deep neural networks), greatly benefit from the parallel processing capabilities of GPUs. These models require extensive computational resources for tasks like backpropagation and gradient descent during training. GPUs significantly reduce the time required for these processes, making the development and training of deep neural networks feasible and more efficient.

GPU-Accelerated Applications in ML

- Personalised Content: Streaming services and e-commerce platforms use recommender systems to suggest personalised content and products. GPUs accelerate the processing of large datasets from user interactions, enabling real-time recommendations.

- Complex Algorithms: Advanced algorithms used in recommender systems, such as collaborative filtering and deep learning, are computationally intensive. GPUs provide the necessary power to run these algorithms effectively.

- Real-time Processing: Financial institutions employ ML for fraud detection, requiring the analysis of vast amounts of transaction data in real time. GPUs make it possible to process and analyse this data swiftly, detecting fraudulent activities more quickly.

- Pattern Recognition: GPUs aid in the complex pattern recognition tasks involved in identifying fraudulent transactions, which often require the analysis of subtle and complex patterns in large datasets.

- Climate Modelling: GPUs are used in climate modelling, helping scientists run complex simulations more rapidly. This speed is crucial for studying climate change scenarios and making timely predictions.

- Molecular Dynamics: In drug discovery and molecular biology, GPUs facilitate the simulation of molecular dynamics, allowing for faster and more accurate modelling of molecular interactions.

- Real-time Data Processing: GPUs are essential in processing vast amounts of data from sensors in real time, a critical requirement for autonomous driving systems.

- Computer Vision and Decision Making: Tasks like image recognition, object detection, and decision-making in autonomous vehicles rely heavily on deep learning models, which are efficiently trained and run on GPUs.

- Language Model Training: Training large language models, like those used in translation services and voice assistants, requires significant computational power, readily provided by GPUs.

- Real-time Interaction: GPUs enable real-time processing capabilities needed for interactive NLP applications, such as chatbots and real-time translation services.
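Collaborative filtering, mentioned above for recommender systems, can be illustrated with a small user-based sketch. This is a simplified toy, not a production recommender: it assumes a dense ratings matrix where 0 means "not rated", includes those zeros when computing cosine similarity, and predicts a missing rating as a similarity-weighted average of other users' ratings. The function names and the 3 x 3 ratings matrix are hypothetical. With millions of users, the all-pairs similarity step amounts to the matrix product R·Rᵀ (followed by normalisation), exactly the kind of work GPUs accelerate.

```python
import math


def cosine(u, v):
    """Cosine similarity between two rating vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    return dot / (nu * nv) if nu and nv else 0.0


def predict(ratings, user, item):
    """Predict ratings[user][item] from similar users who rated the item (0 = unrated)."""
    num = den = 0.0
    for other, row in enumerate(ratings):
        if other == user or row[item] == 0:
            continue
        s = cosine(ratings[user], row)
        num += s * row[item]
        den += abs(s)
    return num / den if den else 0.0


ratings = [
    [5, 4, 0],  # user 0 has not rated item 2
    [5, 4, 1],  # very similar taste to user 0, disliked item 2
    [1, 1, 5],  # different taste, liked item 2
]
print(round(predict(ratings, 0, 2), 2))
```

The prediction for user 0 lands near the low rating given by the most similar user, because that user's rating carries the most weight.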

The Future of GPU with AI and ML

According to a report by MarketsandMarkets, the GPU market, valued at USD 14.3 billion in 2023, is projected to reach USD 63 billion by 2028, growing at a CAGR of 34.6% during the forecast period. This growth is largely fuelled by the increasing demand for GPUs in AI and ML applications. For instance, training a complex generative AI model like OpenAI's GPT-3, which has 175 billion parameters, would be impractical without the parallel processing power of GPUs. According to OpenAI's analysis, the amount of compute used in the largest AI training runs has been doubling roughly every 3.4 months (by comparison, Moore’s Law had a 2-year doubling period); since 2012, this metric has grown by more than 300,000x, whereas a 2-year doubling period would yield only a 7x increase.
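The growth figures in the paragraph above can be sanity-checked with two standard formulas: compound growth, value × (1 + CAGR)^years, and growth from a fixed doubling period, 2^(elapsed / doubling time). The short Python sketch below only uses the numbers quoted in the paragraph; the helper names are illustrative.

```python
import math


def project(value, cagr, years):
    """Compound a starting value at a constant annual growth rate."""
    return value * (1 + cagr) ** years


def growth_from_doubling(months_elapsed, doubling_months):
    """Total growth factor after months_elapsed with a fixed doubling period."""
    return 2 ** (months_elapsed / doubling_months)


# GPU market: USD 14.3B in 2023 growing at 34.6% a year for 5 years (to 2028)
print(round(project(14.3, 0.346, 5), 1))  # 63.2

# Time needed for a 300,000x increase at a 3.4-month doubling time
months = math.log2(300_000) * 3.4
print(round(months / 12, 1))  # 5.2 (years)

# Growth over that same span at Moore's Law's 2-year doubling period
print(round(growth_from_doubling(months, 24), 1))  # 6.0
```

The projection reproduces the USD 63 billion figure, and a 300,000x increase at a 3.4-month doubling time corresponds to a little over five years. A 2-year doubling over the same span gives roughly a 6x increase, in line with the single-digit 7x comparison (the exact multiple depends on the time window measured).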

NVIDIA states that its GPUs can deliver performance increases of 10x to 100x over traditional computing with x86-based CPUs alone, reducing training time from hours to minutes. But to enable next-generation AI capabilities, continuous advancement of GPU hardware remains imperative.

Image Source: https://www.nvidia.com/docs/IO/43399/tesla-brochure-12-lr.pdf

However, climate change means that future AI/ML progress must also be sustainable. Many current AI accelerators are power-hungry, consuming hundreds of watts each, which creates thermal, energy-cost and efficiency issues, especially when scaled up; next-generation AI accelerators will therefore focus on optimised power efficiency. Top cloud GPU providers like Hyperstack already prioritise efficiency, with equipment that is over 20x more energy-efficient than traditional computing.

Emerging Trends

With the rise of GPU usage in AI/ML workloads, we can expect to witness exciting trends such as:

- Neuromorphic Computing: One of the most awaited trends in AI and ML is neuromorphic computing. This approach seeks to mimic the human brain's neural structure and functioning, potentially leading to more efficient and powerful AI systems. GPUs are expected to be instrumental in this endeavour. Their ability to process tasks in parallel aligns well with the operations of a neuromorphic system, which requires the simultaneous processing of numerous signals.

- Specialised AI Hardware: While GPUs currently dominate the AI and ML landscape, there is a growing trend towards specialised AI hardware. For example, NVIDIA’s Ampere Architecture A100 GPU is built with 54 billion transistors for tasks like neural network training and inference, offering up to 20X higher performance than the prior generation. Another notable example is the NVIDIA DGX SuperPOD with 92 DGX-2H nodes, which set a new record by training BERT-Large in just 47 minutes. We can expect more such groundbreaking innovations in GPUs in the coming years.

- Integration with Cloud and Edge Computing: The future of AI and ML will also see greater integration of GPUs with cloud and edge computing. This integration will enable more efficient data processing and model training, particularly for applications requiring real-time analysis. The global edge AI software market, valued at USD 1.1 billion in 2023, is expected to reach USD 4.1 billion by 2028, growing at a CAGR of 30.5% during the forecast period. GPUs are crucial in this growth, providing the necessary computational power at the edge of networks.

Conclusion

As we have seen, GPUs have been instrumental in enabling the breakthroughs across AI and ML that shape technology today. By supplying the parallel processing capabilities needed to train increasingly complex models on vast datasets in reasonable timeframes, GPUs have fundamentally changed what is computationally feasible. Moving forward, the possibilities remain endless for GPU-powered AI/ML innovations across industries to help solve urgent global challenges, from rising cyber threats to drug discovery and genomics.

FAQs

Which is the best GPU for AI Training?

The NVIDIA A100 is considered one of the best GPUs for AI training. It offers up to 20x higher performance than the previous generation, accelerating demanding AI workloads.

Which is the best budget GPU for AI/ML?

Our budget-friendly cloud GPUs for AI/ML workloads, such as the NVIDIA A100, start at $2.20 per hour. You can check our GPU pricing page for details.

Which is the best machine learning GPU?

The NVIDIA H100 and A100 are considered the best machine learning GPUs.

How do I choose a GPU for AI/ML?

Consider factors like CUDA cores, memory capacity, and memory bandwidth. Assess your specific workload requirements, budget constraints, and compatibility with ML frameworks when making a choice. You can check our cloud GPUs for AI and ML workloads.
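Memory capacity is often the first constraint to check. A common rule of thumb is to multiply the parameter count by the bytes per parameter: 2 bytes in FP16/BF16 for weights alone, and roughly 16 bytes per parameter when training with mixed-precision Adam (FP16 weights and gradients plus FP32 master weights and two FP32 optimiser moments). The sketch below is a rough estimate under those assumptions only: it ignores activations, which depend on batch size and sequence length, as well as framework overhead. The function names and the 7-billion-parameter model are hypothetical; the 175-billion figure is GPT-3's size from earlier in this article.

```python
# Bytes needed to store one parameter in common number formats
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "bf16": 2, "int8": 1}


def inference_weights_gb(num_params, dtype="fp16"):
    """Memory for the model weights alone, in GB (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


def adam_mixed_precision_training_gb(num_params):
    """Rule of thumb: ~16 bytes/param for mixed-precision Adam training
    (fp16 weights + fp16 grads + fp32 master weights + two fp32 moments).
    Excludes activations and framework overhead."""
    return num_params * 16 / 1e9


print(inference_weights_gb(7e9))              # 14.0
print(adam_mixed_precision_training_gb(7e9))  # 112.0
print(inference_weights_gb(175e9))            # 350.0
```

For comparison, the A100 comes in 40 GB and 80 GB versions and the H100 SXM has 80 GB, so a hypothetical 7-billion-parameter model fits on one GPU for FP16 inference but needs more memory than a single 80 GB card for full mixed-precision Adam training, and GPT-3's FP16 weights alone require several GPUs.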

Don't let limited computing resources hold you back. Accelerate your AI/ML projects with Hyperstack's high-performance NVIDIA GPUs. We deliver the power and performance you need to train complex models and achieve groundbreaking results. Sign up to try them today!
