TABLE OF CONTENTS

- What is Mistral Codestral Mamba?

- Architecture Comparison of Mamba AI Model: Mamba2 vs. Transformer

- Performance Benchmarks of Mistral Codestral Mamba

- Benchmark Observations of Mistral Codestral Mamba

- How to Deploy Mistral Codestral Mamba?

- Mistral Inference SDK

- TensorRT-LLM

- Local Inference

- Raw Weights

- La Plateforme

- Things to Consider for Mistral Codestral Mamba Deployment

- Case of Open Source for Mistral Codestral Mamba

- Similar Reads:

- FAQs

- What is Mistral Codestral Mamba?

- Can Mistral Codestral Mamba be used for commercial projects?

- How does Mistral Codestral Mamba's performance compare to larger models?

- Is Mistral Codestral Mamba suitable for local deployment?

- What programming languages does Mistral Codestral Mamba support?

Updated: 11 Feb 2025

Mistral AI shows no signs of slowing down, as its latest model posts standout results in code generation. With faster response times, the ability to handle extensive codebases and benchmark scores that rival larger models, this 7B parameter model is a strong contender in AI-driven code generation.

Our latest article explores how Mistral Codestral Mamba outperforms prominent models such as CodeLlama 7B. From benchmarks to model deployment, we’ve got it all covered. Let’s get started!

What is Mistral Codestral Mamba?

Mistral Codestral Mamba is a language model from Mistral AI that specialises in code generation. Released as a tribute to Cleopatra, it is built on the Mamba2 architecture, which offers several key advantages over conventional Transformer models.

Architecture Comparison of Mamba AI Model: Mamba2 vs. Transformer

Mamba2 and Transformer models represent two distinct approaches to sequence modelling in deep learning. While Transformers have become the standard for many large language models (LLMs), Mamba2 introduces a novel architecture based on state space models (SSMs) that addresses some of the limitations inherent in Transformer models.

| Feature | Transformer | Mamba2 |
|---|---|---|
| Architecture Type | Attention-based | State Space Model (SSM) |
| Core Mechanism | Self-Attention | Selective State Space Model (S6) |
| Efficiency | Quadratic time complexity with sequence length | Linear time complexity with sequence length |
| Context Handling | Limited by sequence length due to quadratic scaling | Unbounded context handling |
| Key Innovations | Multi-head self-attention, positional encoding | Selective scan algorithm, hardware-aware algorithm |
| Training and Inference Speed | Slower due to quadratic complexity | Faster due to linear complexity and hardware optimisation |
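To make the efficiency row of the comparison concrete, here is a minimal sketch in plain Python. It is a toy scalar recurrence, not Mamba2's actual selective, hardware-aware algorithm. The function names and the fixed coefficients `a`, `b` and `c` are assumptions chosen purely for illustration. It shows why a state space layer does work proportional to the sequence length while carrying a fixed-size state, whereas causal self-attention scores a number of token pairs that grows quadratically.

```python
def ssm_scan(xs, a=0.9, b=1.0, c=1.0):
    """Toy scalar state space recurrence (illustrative only).

    h_t = a * h_{t-1} + b * x_t
    y_t = c * h_t

    One pass over the input, one fixed-size state: O(L) time, O(1) state.
    """
    h = 0.0
    ys = []
    for x in xs:
        h = a * h + b * x  # update the single hidden state
        ys.append(c * h)   # read the output from the state
    return ys


def ssm_step_count(seq_len):
    """State updates needed by the recurrence above: one per token."""
    return seq_len


def attention_pair_count(seq_len):
    """Query-key pairs scored by causal self-attention.

    Token t attends to tokens 1..t, so the total is L * (L + 1) / 2.
    """
    return seq_len * (seq_len + 1) // 2


if __name__ == "__main__":
    for length in (1_000, 10_000, 100_000):
        print(length, ssm_step_count(length), attention_pair_count(length))
```

Multiplying the sequence length by ten multiplies the recurrence's step count by ten, but multiplies the attention pair count by roughly one hundred.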

Codestral Mamba has been trained specifically to excel in code generation and reasoning tasks. With 7,285,403,648 parameters, it is a substantial model that has been fine-tuned to understand and generate code across various programming languages. Its design philosophy focuses on being a powerful local code assistant: it has been tested on in-context retrieval for sequences of up to 256K tokens, which lets it work with extensive code contexts.
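As a rough guide to what that parameter count means for hardware, the sketch below estimates the memory needed to hold the weights alone at a few numeric precisions. The precisions listed are assumptions for illustration, and the estimate deliberately ignores activations, the recurrent state and framework overhead, so real usage will be higher.

```python
PARAMETER_COUNT = 7_285_403_648  # parameter count quoted in the article

# Bytes per parameter for some common precisions (illustrative choices).
BYTES_PER_PARAM = {"float32": 4, "bfloat16": 2, "int8": 1}


def weight_bytes(num_params, bytes_per_param):
    """Memory needed to store the weights only, in bytes."""
    return num_params * bytes_per_param


def to_gib(num_bytes):
    """Convert bytes to gibibytes (1 GiB = 2**30 bytes)."""
    return num_bytes / 2**30


if __name__ == "__main__":
    for dtype, size in BYTES_PER_PARAM.items():
        gib = to_gib(weight_bytes(PARAMETER_COUNT, size))
        print(f"{dtype}: {gib:.1f} GiB")
```

In bfloat16 the weights come to roughly 13.6 GiB, which is why a single high-memory GPU is a sensible starting point for inference.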

Performance Benchmarks of Mistral Codestral Mamba

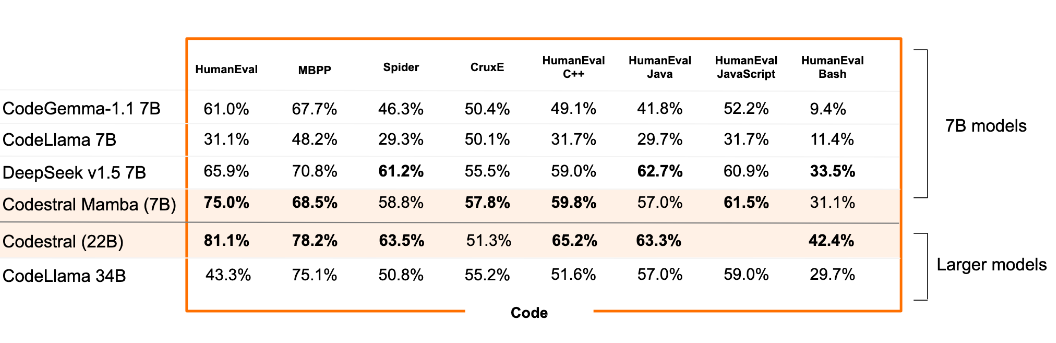

To understand the capabilities of Mistral Codestral Mamba 7B, it's essential to examine its performance across various benchmarks. Start with HumanEval, which tests a model's ability to generate functionally correct code. Codestral Mamba (7B) achieves 75.0%, ahead of CodeGemma-1.1 7B (61.0%) and DeepSeek v1.5 7B (65.9%), and well ahead of the much larger CodeLlama 34B (31.1%). It trails the larger Codestral (22B) model, which scores 81.1%.

Image source: https://mistral.ai/news/codestral-mamba/
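For readers unfamiliar with how a "functionally correct code" benchmark is scored, here is a simplified sketch. It is not the official HumanEval harness, which sandboxes execution and reports pass@k. The helper names and the sample problem are hypothetical. The idea is simply that a generated solution counts only if it runs and passes the problem's unit tests.

```python
def passes_tests(candidate_source, test_source):
    """Return True if the candidate code runs and its tests pass.

    WARNING: exec() runs arbitrary code. A real harness isolates this
    step in a sandbox; this sketch does not.
    """
    namespace = {}
    try:
        exec(candidate_source, namespace)  # define the generated function
        exec(test_source, namespace)       # run assertions against it
    except Exception:
        return False
    return True


def pass_rate(results):
    """Percentage of problems solved, given one boolean per problem."""
    return 100.0 * sum(results) / len(results)


if __name__ == "__main__":
    tests = "assert add(2, 3) == 5"
    good = "def add(a, b):\n    return a + b"
    bad = "def add(a, b):\n    return a - b"
    print(passes_tests(good, tests), passes_tests(bad, tests))
```

A score such as 75.0% then means that three out of every four problems were solved by the model's generated code.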

To go into further detail, let’s break down the benchmarks for Mistral Codestral Mamba:

- MBPP (Mostly Basic Programming Problems): Codestral Mamba AI Model scores 68.5%, which is competitive with other models in its class. It slightly outperforms CodeGemma-1.1 7B (67.7%) and is close to DeepSeek v1.5 7B (70.8%).

- Spider benchmark for SQL query generation: Codestral Mamba AI Model achieves 58.8%, showing strong performance in database-related tasks. This score is higher than CodeGemma-1.1 7B (46.3%) and CodeLlama 34B (29.3%), demonstrating its versatility across different programming domains.

- CruxE: Codestral Mamba scores 57.8% on this code reasoning and execution benchmark, outperforming both CodeGemma-1.1 7B (50.4%) and CodeLlama 34B (50.1%).

- C++: Codestral Mamba achieves 59.8%, outperforming other 7B models.

- Java: Codestral Mamba scores 57.0%, consistently performing across different programming languages.

- JavaScript: Codestral Mamba reaches 61.5%, demonstrating strong capabilities in web development scenarios.

- Bash scripting: Codestral Mamba scores 31.1%, which, while lower than some other benchmarks, is still competitive within its class.

Benchmark Observations of Mistral Codestral Mamba

We have observed several key insights on the benchmarks of Codestral Mamba 7B:

- Despite being a 7B parameter model, Codestral Mamba often outperforms or matches larger models, including some 22B and 34B variants.

- The model shows strong performance across various programming languages and tasks, from basic coding problems to more complex database queries.

- The high scores achieved with fewer parameters highlight the effectiveness of the Mamba architecture in code-related tasks.

- While excelling in certain areas, Codestral Mamba maintains a balanced performance profile across different benchmarks, suggesting its utility as a general-purpose code assistant.

- Given that this is likely an early version of the model, there's potential for even better performance with further refinements and training.

How to Deploy Mistral Codestral Mamba?

Codestral Mamba 7B can be deployed flexibly across various use cases and infrastructure setups. Mistral AI provides several options for integrating the Codestral Mamba model into your development environment:

Mistral Inference SDK

You can deploy Codestral Mamba 7B through the mistral-inference SDK. This software development kit is optimised to work with Mamba models and leverages the reference implementation from the official Mamba GitHub repository.

TensorRT-LLM

If you want to deploy on NVIDIA GPUs with maximum performance, Codestral Mamba 7B is compatible with TensorRT-LLM. This toolkit optimises the model for inference on NVIDIA hardware and can offer significant speed improvements.

Local Inference

While not immediately available, support for Codestral Mamba in llama.cpp is anticipated. This will allow for efficient local inference, particularly useful for developers who need to run the model on their machines or in environments with limited cloud access.

Raw Weights

For researchers and developers who want to implement custom deployment solutions, the raw weights of Codestral Mamba 7B are available for download from HuggingFace.
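A minimal sketch of fetching the weights with the `huggingface_hub` library is shown below. The repository id, the file patterns and the target directory are assumptions that should be checked against the model card on HuggingFace before use. You may also need to accept the model's terms and authenticate first.

```python
from pathlib import Path

# Assumed repository id and file names; verify them on the model card.
REPO_ID = "mistralai/Mamba-Codestral-7B-v0.1"
ALLOW_PATTERNS = ["params.json", "consolidated.safetensors", "tokenizer.model.v3"]


def default_model_dir(home=None):
    """Directory the weights are saved to (an arbitrary choice for this sketch)."""
    base = Path(home) if home is not None else Path.home()
    return base / "mistral_models" / "Mamba-Codestral-7B-v0.1"


def download_weights(local_dir=None):
    """Download only the files listed in ALLOW_PATTERNS and return their folder."""
    # Imported lazily so the helpers above work without the dependency.
    from huggingface_hub import snapshot_download  # pip install huggingface_hub

    target = Path(local_dir) if local_dir is not None else default_model_dir()
    target.mkdir(parents=True, exist_ok=True)
    snapshot_download(
        repo_id=REPO_ID,
        allow_patterns=ALLOW_PATTERNS,
        local_dir=target,
    )
    return target
```

Calling `download_weights()` pulls several gigabytes, so make sure the target disk has enough free space.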

La Plateforme

Mistral AI has made Codestral Mamba 7B available on “la Plateforme” under the identifier codestral-mamba-2407 for easy testing. This allows you to try the model quickly without setting up your own infrastructure.
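The sketch below shows one way to send a prompt to that identifier using only the Python standard library. The endpoint URL, the request and response shapes and the `MISTRAL_API_KEY` environment variable name reflect our understanding of Mistral's chat completions API and are assumptions. Check the current API documentation, including whether this model identifier is still served, before relying on it.

```python
import json
import os
import urllib.request

API_URL = "https://api.mistral.ai/v1/chat/completions"  # assumed endpoint
MODEL_ID = "codestral-mamba-2407"  # identifier given in the article


def build_request(prompt, api_key, max_tokens=256):
    """Build (but do not send) a chat completion request for the model."""
    payload = {
        "model": MODEL_ID,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def complete(prompt, api_key=None):
    """Send the prompt and return the first choice's text (assumed response shape)."""
    key = api_key or os.environ["MISTRAL_API_KEY"]  # assumed variable name
    with urllib.request.urlopen(build_request(prompt, key)) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

With an API key set, `complete("Write a Python function that reverses a string.")` would return the model's reply as a string.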

Things to Consider for Mistral Codestral Mamba Deployment

While the latest Mistral code model shows exceptional performance, it's crucial to consider some important points to maximise its potential.

- Hardware Requirements: While Mamba models are generally more efficient than Transformers, Codestral Mamba still requires significant computational resources. You can start with an NVIDIA RTX A6000, but we recommend the NVIDIA L40 or NVIDIA A100 for inference and the NVIDIA H100 PCIe or NVIDIA H100 SXM for fine-tuning. Check out the NVIDIA H100 80GB SXM5 performance benchmarks on Hyperstack to make an informed decision.

- Batching: Implement efficient batching strategies to maximise throughput, especially when dealing with multiple simultaneous requests.

- Fine-tuning: Consider fine-tuning the model on your specific codebase or domain for even better performance.

- Monitoring: Set up proper monitoring and logging to track the model's performance and usage in your environment.
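The batching and monitoring points above can be sketched together in a few lines. This is an illustrative pattern rather than a production serving setup. `generate_batch` is a hypothetical callable standing in for whichever inference backend you deploy, and the logger name and default batch size are arbitrary.

```python
import logging
import time

logger = logging.getLogger("codestral_mamba")  # arbitrary logger name


def batched(items, batch_size):
    """Yield consecutive slices of `items`, each at most `batch_size` long."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    for start in range(0, len(items), batch_size):
        yield items[start:start + batch_size]


def run_batches(prompts, generate_batch, batch_size=8):
    """Run prompts through `generate_batch` in batches and log each batch's latency.

    `generate_batch` is a placeholder: it takes a list of prompts and
    returns a list of completions in the same order.
    """
    outputs = []
    for batch in batched(prompts, batch_size):
        started = time.perf_counter()
        results = generate_batch(batch)
        elapsed = time.perf_counter() - started
        logger.info("batch_size=%d latency_s=%.3f", len(batch), elapsed)
        outputs.extend(results)
    return outputs


if __name__ == "__main__":
    logging.basicConfig(level=logging.INFO)
    fake_backend = lambda batch: [p.upper() for p in batch]  # stand-in model
    print(run_batches(["def add", "def sub", "def mul"], fake_backend, batch_size=2))
```

Logging batch size alongside latency makes it easier to find the batch size at which throughput stops improving on your hardware.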

Case of Open Source for Mistral Codestral Mamba

Mistral AI's decision to release Codestral Mamba under the Apache 2.0 license is a huge win for the open-source community. The Apache 2.0 license offers several key benefits:

- Freedom to Use and Modify: Developers and researchers can freely use, modify and distribute Codestral Mamba without restrictive conditions. This approach encourages experimentation and adaptation of the model for various use cases.

- Patent Protection: The license includes a patent grant that provides users with protection against patent claims from contributors to the software. This is crucial in AI where patent concerns can sometimes hinder open collaboration.

- Commercial Use: The Apache 2.0 license permits commercial use, so businesses can integrate Codestral Mamba into their products or services without fear of licensing conflicts. By contrast, the larger Codestral 22B is available under a commercial license for self-deployment.

Similar Reads:

- Phi-3: Microsoft's Latest Open AI Small Language Model

- OpenAI Releases Latest AI Flagship Model GPT-4o

FAQs

What is Mistral Codestral Mamba?

Mistral Codestral Mamba is a language model from Mistral AI that specialises in code generation. It is named as a tribute to Cleopatra and combines the Mamba2 architecture with specialised training for code-related tasks.

Can Mistral Codestral Mamba be used for commercial projects?

Yes, Codestral Mamba is released under the Apache 2.0 license, which allows commercial use.

How does Mistral Codestral Mamba's performance compare to larger models?

Despite being a 7B parameter model, Codestral Mamba often outperforms or matches larger 22B and 34B models in coding benchmarks.

Is Mistral Codestral Mamba suitable for local deployment?

Yes, Codestral Mamba can be deployed locally, and anticipated support in llama.cpp is expected to make local inference more efficient.

What programming languages does Mistral Codestral Mamba support?

Codestral Mamba shows strong performance across various languages, including C++, Java, JavaScript and Bash.
