Benefits of Cloud GPU for LLM

Large language models are transforming how we work with language in fields such as machine translation, chatbot development and creative content generation. Rent cloud GPUs to train large language models faster.

Unmatched Performance

Accelerate your LLM training and inference with the raw power of NVIDIA GPUs built on Tensor Core architectures.

Pre-Configured Environments

Get started quickly with pre-configured cloud LLM environments equipped with optimised software stacks and libraries, reducing setup time and complexity.

Scalability On-Demand

Scale your LLM resources up or down on demand to match your project's needs. Avoid the upfront costs and maintenance burden of managing your own hardware infrastructure.

Cost-Effective

Our pay-for-what-you-use pricing model matches your costs to your actual usage, helping you optimise your cloud GPU spend. It is best suited to experimental projects or those with fluctuating resource demands.

Effortless Collaboration

Facilitate seamless collaboration on LLM projects with team members anywhere in the world. Share resources securely and work together efficiently to accelerate progress and innovation.

LLM Solutions

Personalised Learning:

LLMs personalise educational content and adapt to individual learning styles, creating engaging and effective learning experiences. This improves student engagement and knowledge retention, and enables tailored learning pathways, adaptive assessments, and accessible education for diverse needs.

Key Applications: Personalised tutoring, adaptive learning platforms, language learning apps, disability support in education.

Legal Research & Automation:

LLMs analyse large volumes of legal documents, identify relevant information, and generate summaries and drafts, streamlining legal workflows. This increases research efficiency, reduces legal costs, improves accuracy and compliance, and speeds up case preparation.

Key Applications: Contract review and analysis, legal due diligence, case preparation, legal document generation, legal research automation.

Creative Content Generation:

LLMs generate unique and engaging content such as poems, scripts, musical pieces, and marketing copy, sparking creative ideas. This helps teams overcome creative blocks, explore diverse content styles, personalise marketing campaigns and automate content creation tasks.

Key Applications: Marketing campaigns, social media content creation, advertising copywriting, product descriptions, music composition, and scriptwriting.

Cybersecurity & Threat Detection:

LLMs analyse security logs and detect anomalies, helping to identify and mitigate cyber threats before they escalate. This supports proactive threat detection and prevention, improves security posture, and reduces the risk of cyberattacks and data breaches.

Key Applications: Cybersecurity threat analysis, malware detection, phishing email identification, intrusion detection systems, anomaly detection in network traffic.

Assistive Technologies:

LLMs power real-time translation tools and text-to-speech and speech-to-text applications, facilitating communication and accessibility. This improves communication for individuals with disabilities or facing language barriers, widens access to information and resources, and supports inclusive technology solutions.

Key Applications: Real-time translation for deaf and hard-of-hearing individuals, text-to-speech and speech-to-text tools, assistive communication devices, and accessibility features in software and websites.

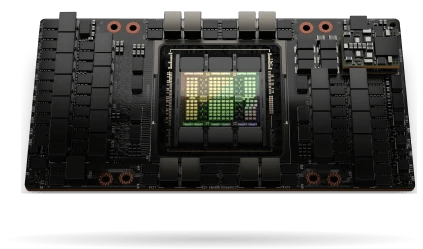

GPUs We Recommend for LLM

Achieve breakthrough results in your LLM projects with NVIDIA’s cutting-edge GPU technology. Rent GPUs for LLM workloads on Hyperstack.

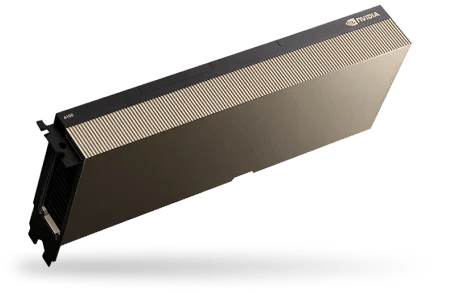

NVIDIA A100

Unlock the potential of A100s for large language model training with high memory bandwidth for fast data transfer during LLM operations.

NVIDIA L40

Experience up to 5x higher inference performance than GPUs with third-generation Tensor Cores, using the NVIDIA L40 for LLM workloads.

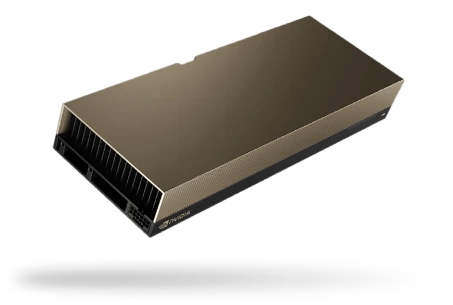

NVIDIA H100 SXM

Experience up to 30x faster processing with the H100 SXM GPU, available only on the Hyperstack Supercloud.

Frequently Asked Questions

We build our services around you. Our product support and product development go hand in hand to deliver you the best solutions available.

Can I use multiple GPUs for LLM tasks?

Yes, you can use multiple cloud GPUs for LLM tasks to significantly accelerate training or inference for large workloads.

Why rent a GPU for LLM workloads?

Using a cloud GPU for LLM provides access to high-performance computing resources without the need for expensive hardware investments. This makes it easier for you to handle the significant computational requirements of LLMs. Cloud GPUs also offer scalability, allowing you to adjust computational resources as needed. This flexibility is important for managing varying workloads and complex model training.

Which cloud providers offer GPU instances for Large Language Models?

Hyperstack Cloud offers a range of GPU-powered instances ideal for training and deploying large language models. We provide powerful cloud GPUs like the NVIDIA A100 and H100 SXM, with the high computing performance needed for natural language processing models like BERT and GPT-3.

How can I select the right GPU instance for my Large Language Model?

Choosing the right GPU for your LLM depends on several factors:

- Memory: Opt for GPUs with ample VRAM to accommodate large models and datasets.

- Bandwidth: High memory bandwidth is essential for faster data transfer between the GPU and memory.

- Scalability: Ensure the GPU can scale in multi-GPU setups for distributed training.
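
As a rough illustration of the memory and scalability factors above, the following Python sketch estimates how much GPU memory a model's weights occupy and how many GPUs would be needed to hold them for inference. It is a back-of-the-envelope aid, not a Hyperstack sizing tool: the function names, the 20% overhead factor and the 80 GB per-GPU figure in the example are illustrative assumptions, and training needs considerably more memory than this estimate because of gradients and optimizer state.

```python
import math

# Bytes used to store one parameter at common numeric precisions.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "bf16": 2, "int8": 1}


def weights_gb(num_params: float, dtype: str = "fp16") -> float:
    """Memory needed to hold the model weights alone, in GB (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


def min_gpus_for_inference(
    num_params: float,
    gpu_memory_gb: float,
    dtype: str = "fp16",
    overhead: float = 1.2,  # assumed headroom for activations and cache
) -> int:
    """Smallest GPU count whose combined memory fits the weights plus overhead."""
    needed_gb = weights_gb(num_params, dtype) * overhead
    return math.ceil(needed_gb / gpu_memory_gb)


if __name__ == "__main__":
    # Hypothetical example: a 7-billion-parameter model stored in fp16.
    print(weights_gb(7e9, "fp16"))  # 14.0 (GB for weights only)

    # Hypothetical example: a 70-billion-parameter model on GPUs
    # assumed to have 80 GB of memory each.
    print(min_gpus_for_inference(70e9, gpu_memory_gb=80))  # 3
```

Treat the result as a lower bound when shortlisting instances; actual requirements depend on batch size, context length and the serving or training framework you use.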

See What Our Customers Think...

“This is the fastest GPU service I have ever used.”

Anonymous user

You guys rock!! You have NO IDEA how badly I need a solid GPU cloud provider. AWS/Azure are literally only for enterprise clients at this point, it's impossible to build a highly technical startup and get hit with their ridiculous egress fees. You guys have excellent latency all the way down here to Atlanta from CA.

By far the most important aspect of a cloud provider, only second to cost/quality ratio, is their API. The UI/UX of the console is extremely well designed and I appreciate the quality. So, I’ll be diving into your API deeply. Other GPU providers don’t offer a programmatic way of creating OS images, so the fact that you do is key for me.

Anonymous user