Updated: 11 Feb 2025

NVIDIA H100 SXM On-Demand

We're thrilled to announce the upcoming addition of the NVIDIA H100-80GB-SXM5 GPU to our Hyperstack On-Demand offerings. It joins our existing NVIDIA H100-80GB-PCIe and H100-80GB-PCIe-NVLink options, expanding our high-performance computing solutions to meet the most demanding AI and HPC workloads.

Decoding the H100 Family: PCIe, PCIe-NVLink and SXM5

While all members of the H100 GPU family share the NVIDIA Hopper architecture, it's crucial to understand the distinctions between the PCIe, PCIe-NVLink and SXM5 variants:

- NVIDIA H100-80GB-PCIe: The standard PCIe card utilises a PCIe 5.0 x16 interface.

- NVIDIA H100-80GB-PCIe-NVLink: A PCIe card with additional NVLink connectivity for enhanced GPU-to-GPU communication between paired GPUs (0-1, 2-3, 4-5, 6-7).

- NVIDIA H100-80GB-SXM5: The server module (SXM) form factor offers superior performance, with more CUDA cores than the PCIe card (16,896 vs. 14,592) and higher memory bandwidth (3.35 TB/s vs. 2 TB/s). This variant is typically found in HGX and DGX systems.

IMPORTANT: The NVIDIA H100-80GB-PCIe-NVLink is occasionally mislabeled as SXM5. These are distinct configurations with important differences.
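To make the PCIe-NVLink pairing concrete, here is a minimal sketch. It assumes an 8-GPU node whose NVLink bridges connect the pairs listed above (0-1, 2-3, 4-5, 6-7). The function name `nvlink_paired` and the `num_gpus` parameter are hypothetical, chosen for illustration only.

```python
def nvlink_paired(gpu_a: int, gpu_b: int, num_gpus: int = 8) -> bool:
    """Return True if two GPU indices share an NVLink bridge, assuming the
    pairing (0-1, 2-3, 4-5, 6-7) described for the PCIe-NVLink variant."""
    for idx in (gpu_a, gpu_b):
        if not 0 <= idx < num_gpus:
            raise ValueError(f"GPU index {idx} is outside 0..{num_gpus - 1}")
    # Consecutive even/odd indices form a pair: 0-1, 2-3, 4-5, 6-7.
    return gpu_a != gpu_b and gpu_a // 2 == gpu_b // 2


if __name__ == "__main__":
    for a, b in [(0, 1), (1, 2), (6, 7)]:
        print(f"GPU {a} <-> GPU {b}: NVLink pair = {nvlink_paired(a, b)}")
```

A check like this can help when placing a two-GPU job on a PCIe-NVLink node, since only GPUs within the same pair benefit from the NVLink bridge under this assumption.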

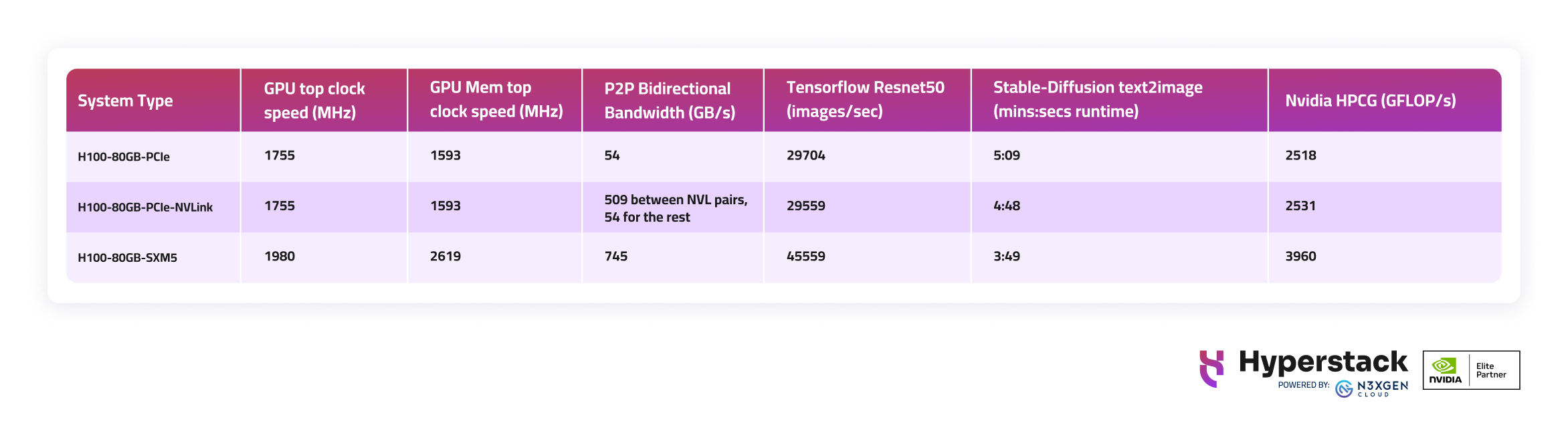

Performance Benchmarks Comparison

To illustrate the performance differences between these variants, let's examine some key metrics from our internal benchmarks:

Why SXM5 Stands Out

The NVIDIA H100-80GB-SXM5 isn't just an incremental upgrade. It offers exceptional performance and capabilities:

- Unmatched Performance: Benchmarks reveal that the gap between SXM5 and PCIe-NVLink is substantially larger than the difference between PCIe-NVLink and standard PCIe. This translates to a noticeable boost in real-world applications.

- Superior Scalability: With a staggering P2P bandwidth of 745 GB/s (compared to 509 GB/s for PCIe-NVLink and 54 GB/s for standard PCIe), the SXM5 variant excels in multi-GPU configurations. This makes it the ideal choice for large-scale distributed training and inference tasks that demand seamless GPU communication.
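As a rough illustration of what these P2P figures mean in practice, the sketch below estimates the ideal time to move a payload between two GPUs at each quoted bandwidth. It is a back-of-the-envelope calculation that ignores latency, protocol overhead and contention, so real transfers will be slower. The names `P2P_BANDWIDTH_GB_S` and `ideal_transfer_ms` are hypothetical, and the 10 GB payload is an arbitrary example size.

```python
# P2P bandwidth figures quoted above, in GB/s.
P2P_BANDWIDTH_GB_S = {
    "SXM5": 745.0,
    "PCIe-NVLink": 509.0,
    "PCIe": 54.0,
}


def ideal_transfer_ms(payload_gb: float, bandwidth_gb_s: float) -> float:
    """Ideal (overhead-free) transfer time in milliseconds."""
    if bandwidth_gb_s <= 0:
        raise ValueError("bandwidth must be positive")
    return payload_gb / bandwidth_gb_s * 1000.0


if __name__ == "__main__":
    payload_gb = 10.0  # arbitrary example payload, e.g. a gradient buffer
    for variant, bandwidth in P2P_BANDWIDTH_GB_S.items():
        ms = ideal_transfer_ms(payload_gb, bandwidth)
        print(f"{variant:12s} {bandwidth:6.0f} GB/s -> {ms:7.1f} ms for {payload_gb:.0f} GB")
```

Because the estimate scales linearly with payload size, the ratio between variants stays the same for any payload. Only the absolute times change.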

Our latest NVIDIA H100-80GB-SXM5 offerings aim to provide cutting-edge solutions for your most computationally intensive workloads. Whether you are pursuing AI research, tackling complex scientific simulations or processing massive datasets, the SXM5 variant offers the raw power and scalability to drive your projects. Stay tuned for more performance benchmarks.

Accelerate your AI with Hyperstack's high-end GPUs. Sign up now!
