TABLE OF CONTENTS

Updated: 7 Apr 2025

NVIDIA H100 GPUs On-Demand

In our latest tutorial, we explore how to deploy and use Llama 4 Maverick on Hyperstack. From setting up your environment to running multimodal tasks, we guide you through each step to help you get started with Meta’s most advanced open-weight model.

What is Llama 4 Maverick?

Llama 4 Maverick is a 17B active and 400B total parameters AI model developed by Meta as part of its Llama 4 series. It is a natively multimodal system capable of processing and integrating various data types, including text, images, video, and audio. Designed to outperform models like GPT-4o and Gemini 2.0, Llama 4 Maverick offers advanced capabilities in handling complex tasks across multiple modalities.

Features of Llama 4 Maverick

The features of Meta Llama 4 Maverick include:

-

Multimodal Capabilities: Processes and integrates text, images, video, and audio data. The native multimodal also supports 1M context length.

-

High Parameter Count: Utilises 17 billion active and 400B total parameters with a mixture of 128 experts.

-

Open-Weight Model: Shares pre-trained parameters while keeping training data and architecture proprietary.

- Best-in-class Performance: Llama 4 Maverick delivers exceptional performance relative to cost, with its experimental chat version achieving an ELO score of 1417 on LMArena.

-

Optimised Resource Usage: Employs a mixture of expert (MoE) architecture for efficient processing.

-

Reduced Bias: Shows improved balance and neutrality in responses to contentious topics.

Steps to Deploy Llama 4 Maverick on Hyperstack

Now, let's walk through the step-by-step process of deploying Llama 4 Maverick on Hyperstack.

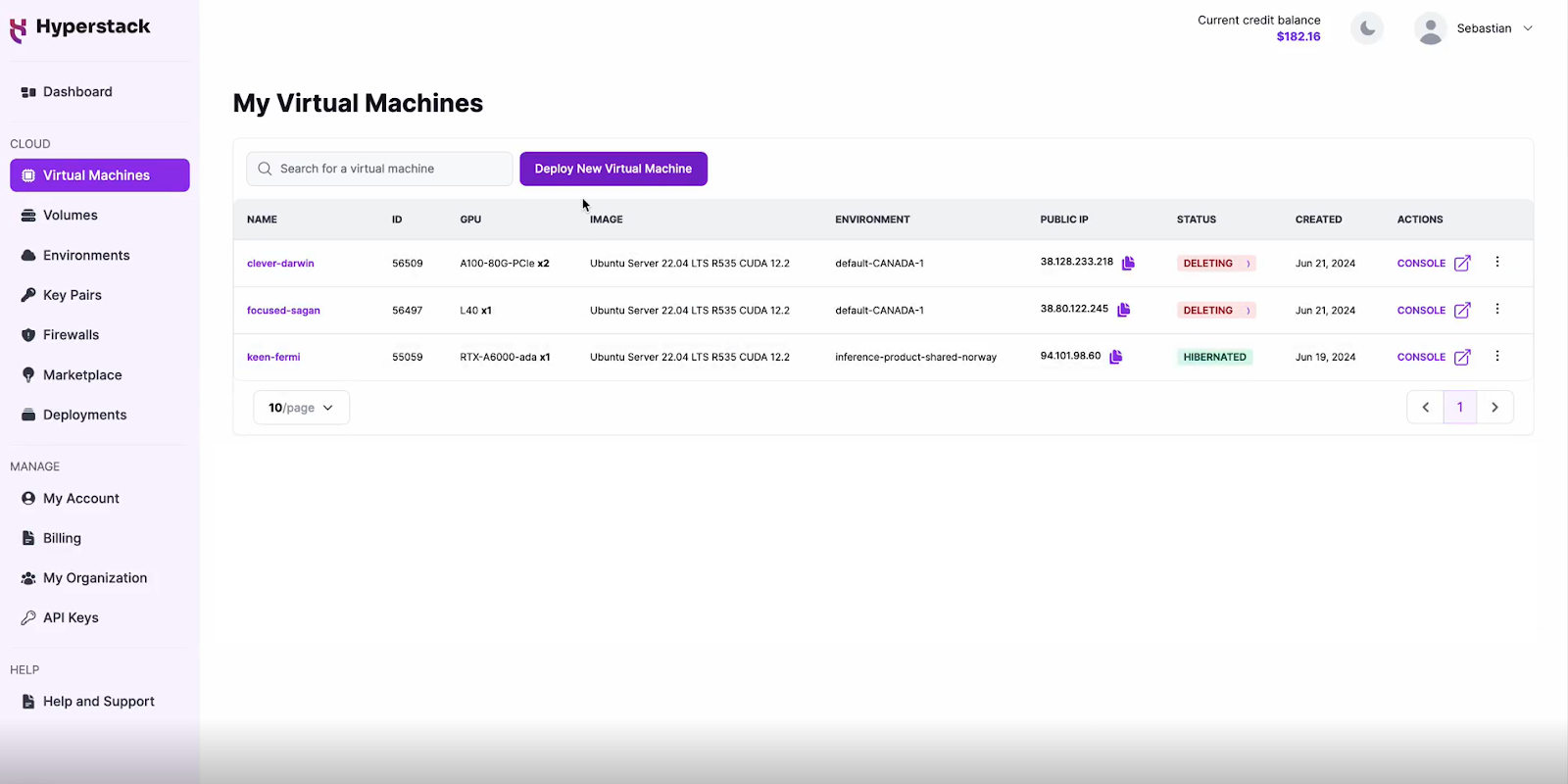

Step 1: Accessing Hyperstack

- Go to the Hyperstack website and log in to your account.

- If you're new to Hyperstack, you'll need to create an account and set up your billing information. Check our documentation to get started with Hyperstack.

- Once logged in, you'll be greeted by the Hyperstack dashboard, which provides an overview of your resources and deployments.

Step 2: Deploying a New Virtual Machine

Initiate Deployment

- Look for the "Deploy New Virtual Machine" button on the dashboard.

- Click it to start the deployment process.

Select Hardware Configuration

- In the hardware options, choose the "8x H100-80G-SXM5" flavour.

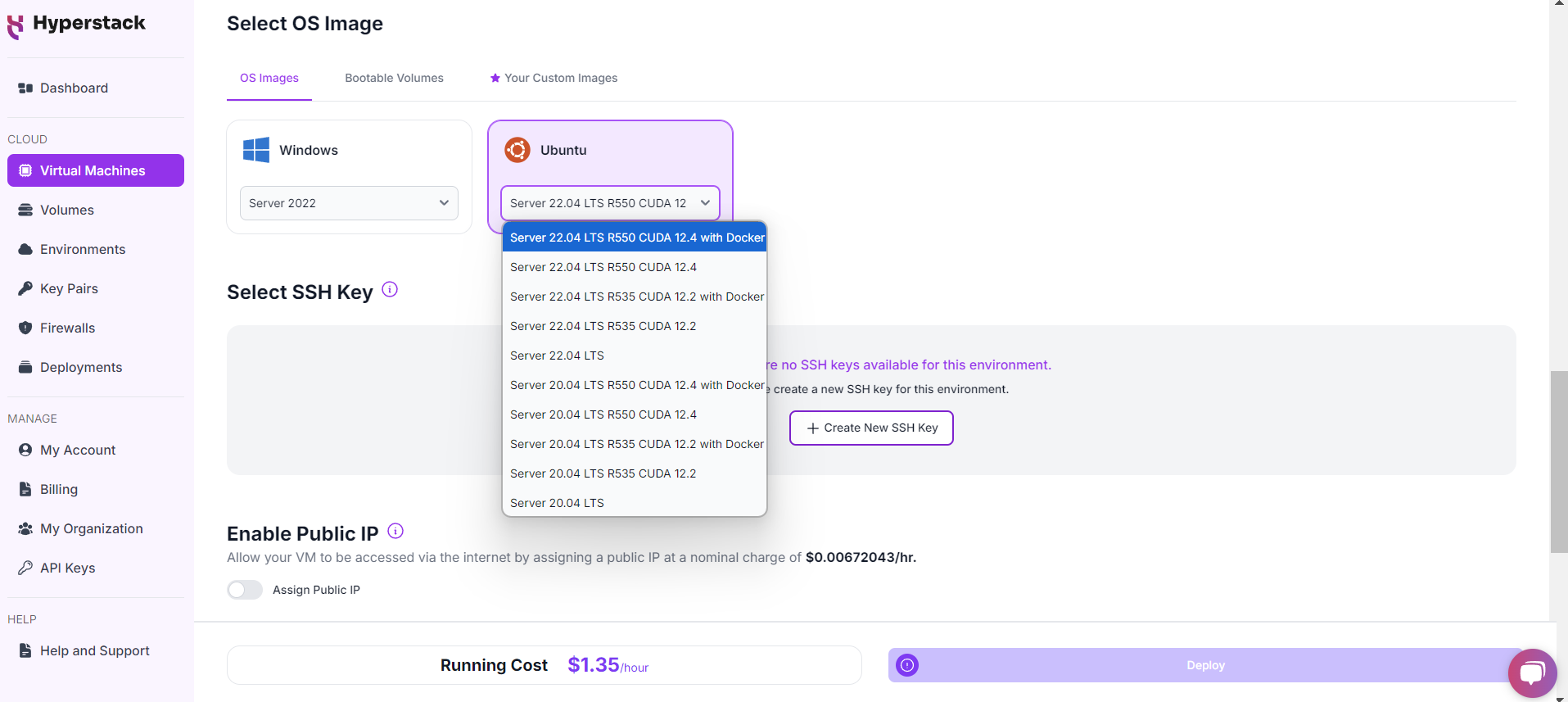

Choose the Operating System

- Select the "Ubuntu Server 22.04 LTS R550 CUDA 12.4 with Docker".

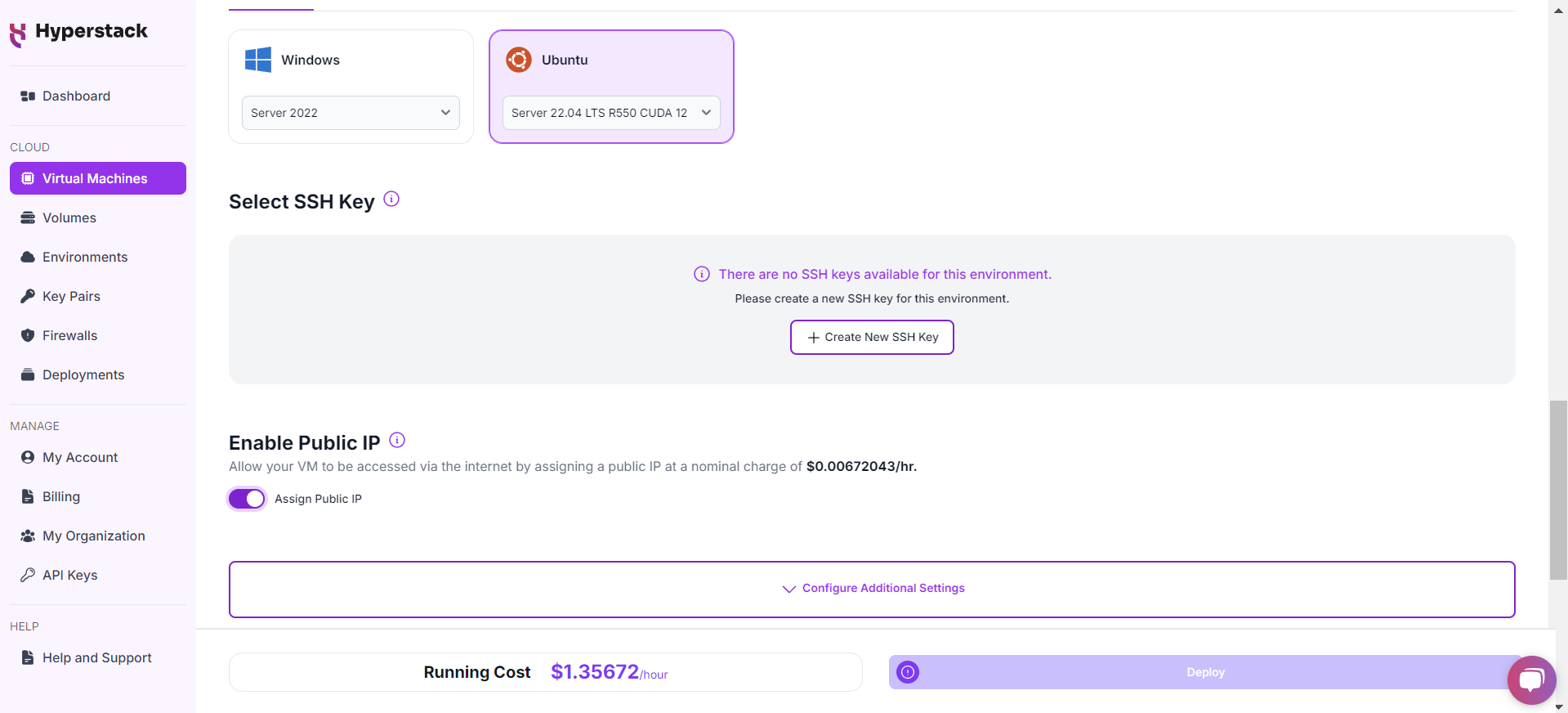

Select a keypair

- Select one of the keypairs in your account. Don't have a keypair yet? See our Getting Started tutorial for creating one.

Network Configuration

- Ensure you assign a Public IP to your Virtual machine.

- This allows you to access your VM from the internet, which is crucial for remote management and API access.

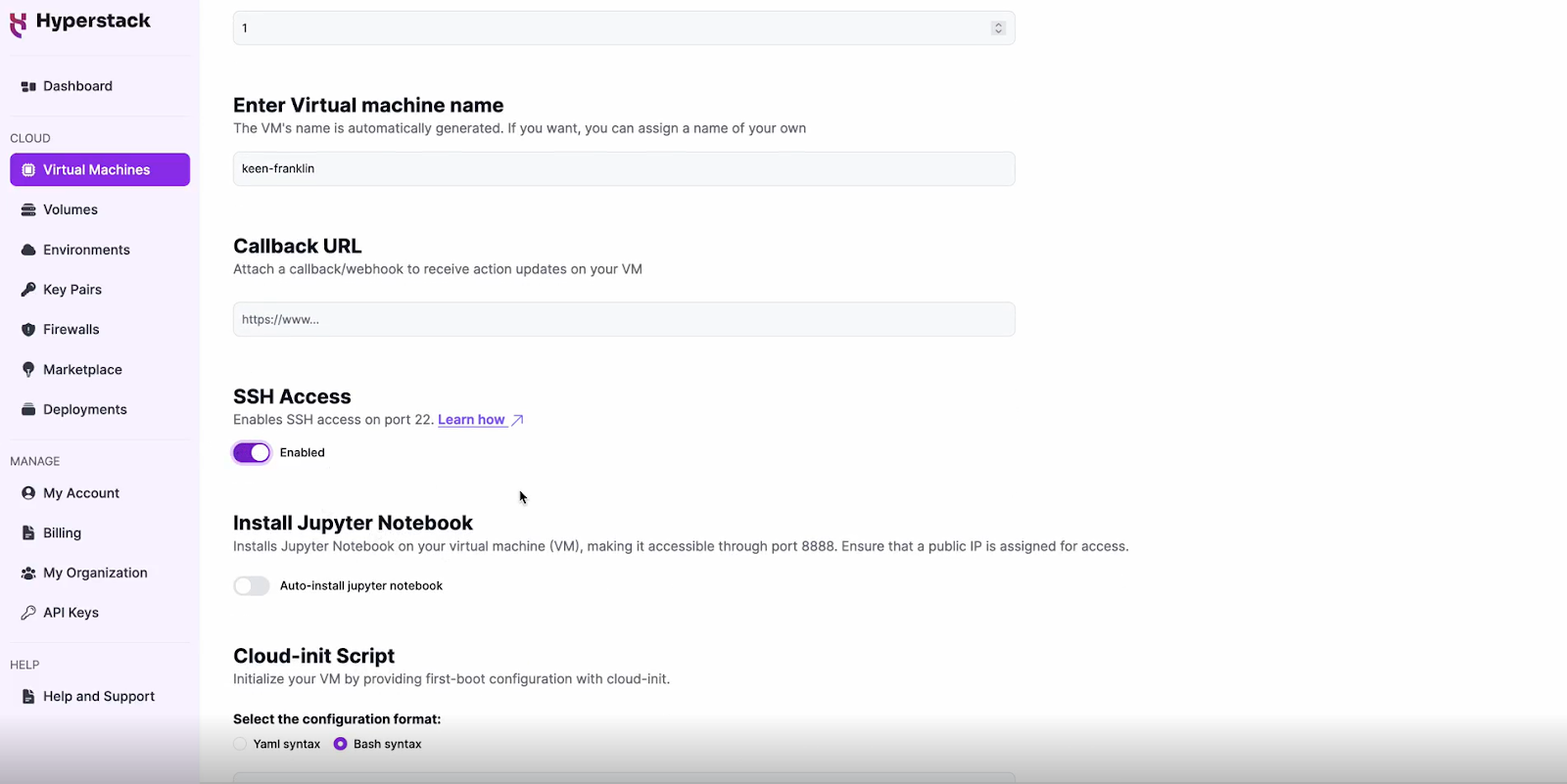

Enable SSH Access

- Make sure to enable an SSH connection.

- You'll need this to connect and manage your VM securely.

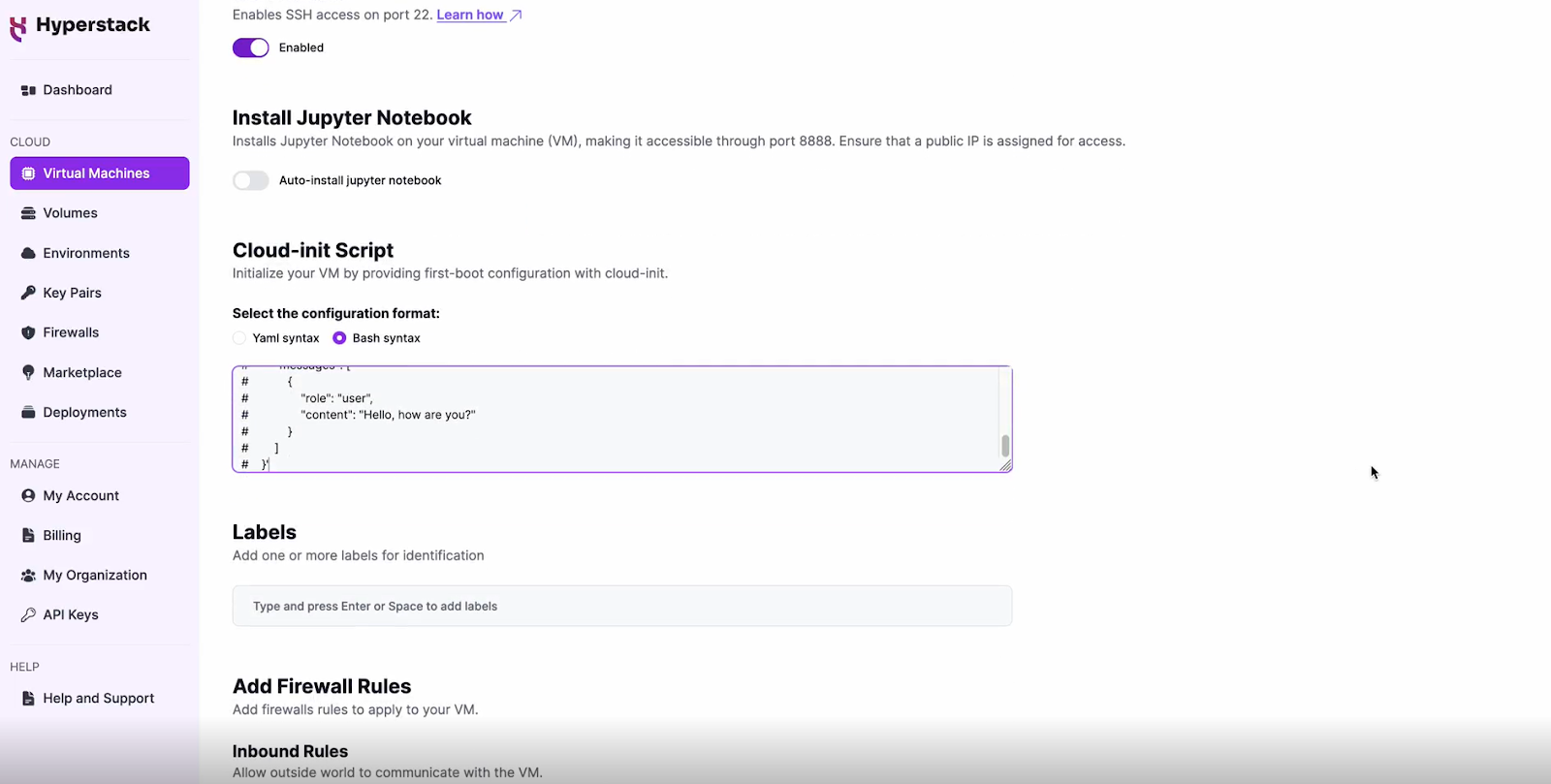

Configure Additional Settings

- Look for an "Additional Settings" or "Advanced Options" section.

- Here, you'll find a field for cloud-init scripts. This is where you'll paste the initialisation script. Click here to get the cloud-init script!

To use the Llama 4 Maverick you need to:

- Request access here: https://huggingface.co/meta-llama/Llama-4-Maverick-17B-128E-Instruct-FP8

- Create a HuggingFace token to access the gated model, see more info here.

- Replace line 12 of the attached cloud-init file with your HuggingFace token.

Please note: this cloud-init script will only enable the API once for demo-ing purposes. For production environments, consider using containerization (e.g. Docker), secure connections, secret management, and monitoring for your API.

Review and Deploy

- Double-check all your settings.

- Click the "Deploy" button to launch your virtual machine.

Please Note: This script will deploy the model with 430k context size. For the full context size (1M tokens) we suggest a multi-node setup which is out of scope for this tutorial.

Step 3: Initialisation and Setup

After deploying your VM, the cloud-init script will begin its work. This process typically takes about 5-10 minutes. During this time, the script performs several crucial tasks:

- Dependencies Installation: Installs all necessary libraries and tools required to run Llama 4 Maverick.

- Model Download: Fetches the Llama 4 Maverick model files from the specified repository.

While waiting, you can prepare your local environment for SSH access and familiarise yourself with the Hyperstack dashboard.

Step 4: Accessing Your VM

Once the initialisation is complete, you can access your VM:

Locate SSH Details

- In the Hyperstack dashboard, find your VM's details.

- Look for the public IP address, which you will need to connect to your VM with SSH.

Connect via SSH

- Open a terminal on your local machine.

- Use the command ssh -i [path_to_ssh_key] [os_username]@[vm_ip_address] (e.g: ssh -i /users/username/downloads/keypair_hyperstack ubuntu@0.0.0.0.0)

- Replace username and ip_address with the details provided by Hyperstack.

Interacting with Llama 4 Maverick

To access and experiment with Llama 4 Maverick, SSH into your machine after completing the setup. If you are having trouble connecting with SSH, watch our recent platform tour video (at 4:08) for a demo. Once connected, use this API call on your machine to start using the Llama 4 Maverick:

MODEL_NAME="meta-llama/Llama-4-Maverick-17B-128E-Instruct-FP8"

curl -X POST http://localhost:8000/v1/chat/completions \

-H "Content-Type: application/json" \

-d '{

"model": "'$MODEL_NAME'",

"messages": [

{

"role": "user",

"content": "Hello, how are you?"

}

]

}'Troubleshooting Llama 4 Maverick

If you are having any issues, please follow the following instructions:

-

SSH into your VM.

-

Check the cloud-init logs with the following command: cat /var/log/cloud-init-output.log

- Use the logs to debug any issues.

Step 5: Hibernating Your VM

When you're finished with your current workload, you can hibernate your VM to avoid incurring unnecessary costs:

- In the Hyperstack dashboard, locate your Virtual machine.

- Look for a "Hibernate" option.

- Click to hibernate the VM, which will stop billing for compute resources while preserving your setup.

Why Deploy Llama 4 Maverick on Hyperstack?

Hyperstack is a cloud platform designed to accelerate AI and machine learning workloads. Here's why it's an excellent choice for deploying Llama 4 Maverick:

- Availability: Hyperstack provides access to the latest and most powerful GPUs such as the NVIDIA H100 on-demand, specifically designed to handle large language models.

- Ease of Deployment: With pre-configured environments and one-click deployments, setting up complex AI models becomes significantly simpler on our platform.

- Scalability: You can easily scale your resources up or down based on your computational needs.

- Cost-Effectiveness: You pay only for the resources you use with our cost-effective cloud GPU pricing.

- Integration Capabilities: Hyperstack provides easy integration with popular AI frameworks and tools.

Explore More Tutorials on Llama

New to Hyperstack? Log in to Get Started with Our Ultimate Cloud GPU Platform Today!

FAQs

What is Llama 4 Maverick?

Llama 4 Maverick is a 17-billion active parameter AI model developed by Meta, featuring multimodal capabilities to process text, images, video and audio.

How does Llama 4 Maverick differ from Llama 3?

It offers enhanced multimodal processing, a higher parameter count, and improved performance in coding and reasoning tasks compared to earlier models.

What are the use cases for Llama 4 Maverick?

Its multimodal capabilities make it suitable for applications requiring the integration of text, images, video, and audio, such as advanced conversational AI, content creation and data analysis.

How does Llama 4 Maverick perform?

Llama 4 Maverick outperforms GPT-4o and Gemini 2.0 Flash in various benchmarks and matches DeepSeek v3 in reasoning and coding with fewer parameters. It offers top performance-to-cost efficiency, scoring an ELO of 1417 on LMArena.

Where can I access the Llama 4 Maverick model?

You can easily access the latest Llama 4 Maverick models on llama.com and Hugging Face.

Subscribe to Hyperstack!

Enter your email to get updates to your inbox every week

Get Started

Ready to build the next big thing in AI?

26 Mar 2025

26 Mar 2025