In our latest tutorial, we will show you how to deploy DeepSeek-R1 on Hyperstack. With Hyperstack’s high-performance cloud GPU infrastructure, you’ll have DeepSeek-R1 up and running seamlessly. Whether you're looking to fine-tune its advanced reasoning capabilities or integrate it into your next big project, Hyperstack makes the process simple, scalable and cost-efficient.

Let's get started!

What is DeepSeek-R1?

DeepSeek-R1 is a 671B-parameter open-source Mixture-of-Experts language model with 37B parameters activated per token and a 128K context length. It builds on the DeepSeek-V3 base model, whose architecture combines Multi-head Latent Attention (MLA) with DeepSeekMoE to improve inference speed and training efficiency. That architecture also introduced an auxiliary-loss-free load-balancing strategy and a Multi-Token Prediction (MTP) training objective. The result is a model that performs strongly against other open-source models while remaining cost-efficient and scalable.

What are the Key Features of DeepSeek-R1?

DeepSeek-R1 is an open-source AI model designed to enhance logical reasoning and problem-solving capabilities. Its key features include:

- Advanced Reasoning Capabilities: DeepSeek-R1 excels in logical inference, mathematical reasoning, and real-time problem-solving, outperforming other models in tasks requiring structured thinking.

- Reinforcement Learning Training: The model employs reinforcement learning techniques, allowing it to develop advanced reasoning skills and generate logically sound responses.

- Open-Source: Released under the MIT license, DeepSeek-R1 is freely available for use, modification and redistribution.

- Distilled Model Variants: DeepSeek-R1 includes distilled versions with parameter counts ranging from 1.5B to 70B, catering to various computational requirements and use cases.

How to Use DeepSeek-R1?

Now, let's walk through the step-by-step process of deploying DeepSeek-R1 on Hyperstack.

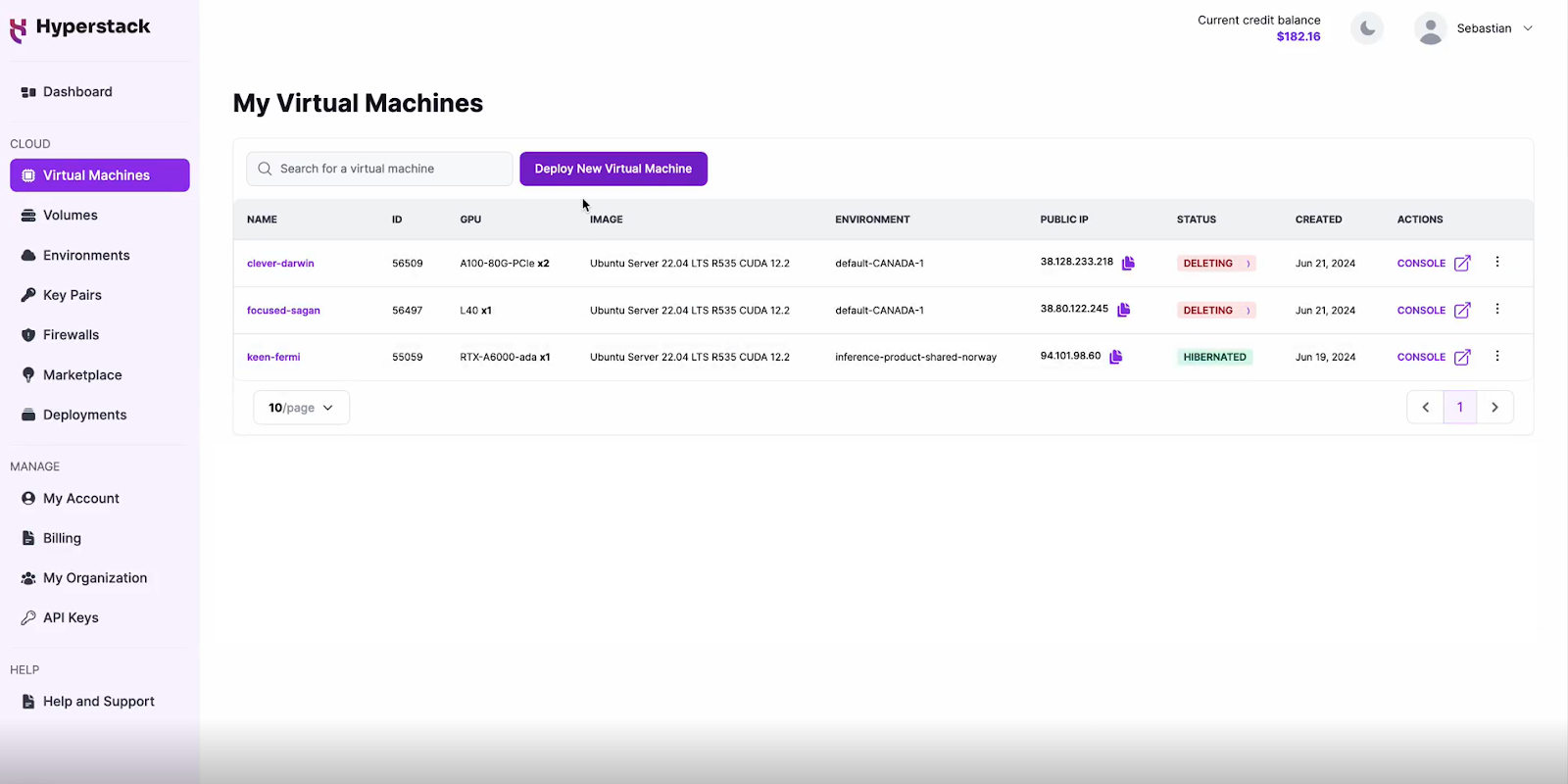

Step 1: Accessing Hyperstack

- Go to the Hyperstack website and log in to your account.

- If you're new to Hyperstack, you must create an account and set up your billing information. Check our documentation to get started with Hyperstack.

- Once logged in, you'll be greeted by the Hyperstack dashboard, which provides an overview of your resources and deployments.

Step 2: Deploying a New Virtual Machine

Initiate Deployment

- Look for the "Deploy New Virtual Machine" button on the dashboard.

- Click it to start the deployment process.

Select Hardware Configuration

- Choose the "8xH100-SXM5" flavour. This configuration provides eight NVIDIA H100 SXM5 GPUs in a single VM, offering the memory and compute needed for large models like DeepSeek-R1.

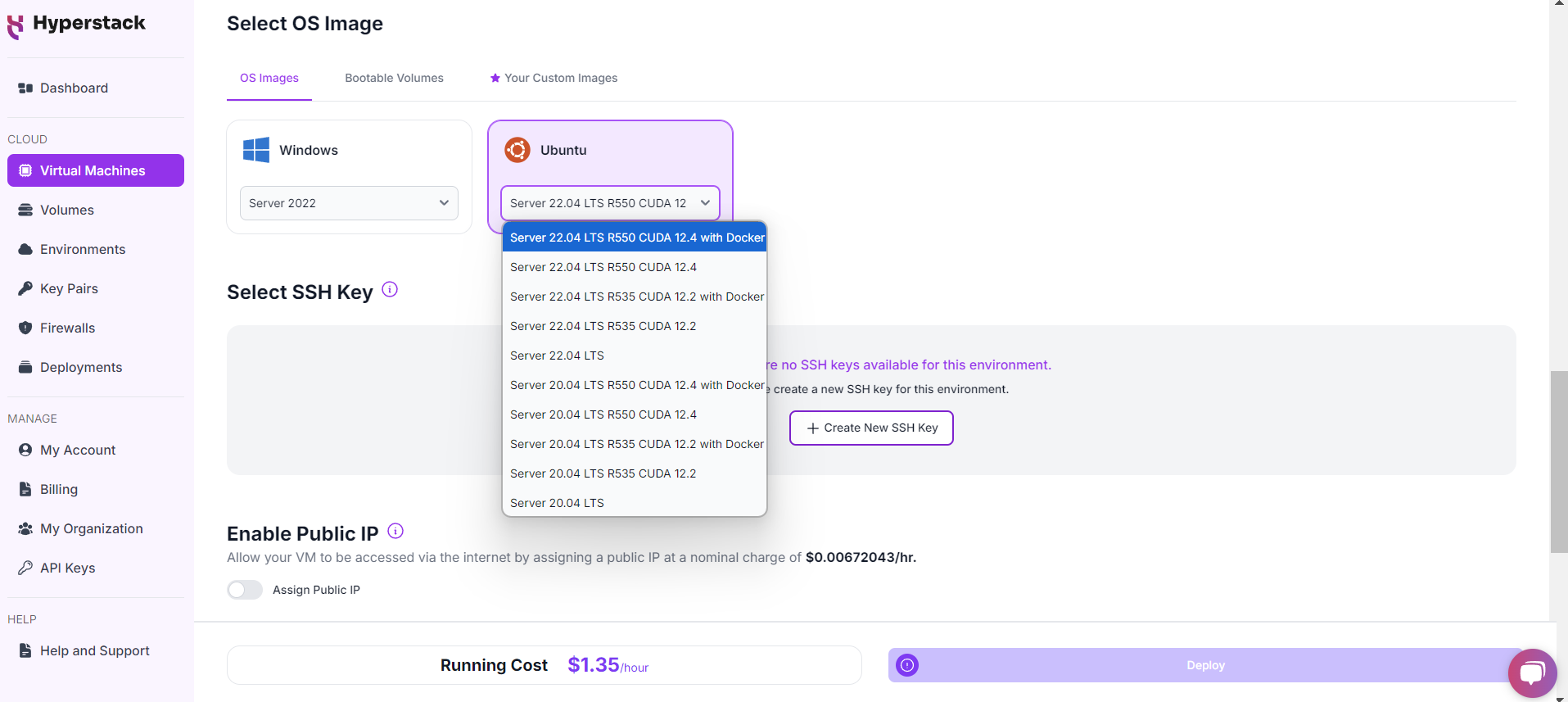
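As a rough sanity check on why an eight-GPU flavour is used, the sketch below estimates the memory needed for the weights of a 671B-parameter model at a few precisions and compares it with the total GPU memory of the VM. It is a weights-only approximation: it ignores the KV cache and runtime overhead, and real quantised model files mix precisions, so they are typically larger than the 4-bit figure. `weights_gb` is a hypothetical helper name used for illustration only.

```shell
# Rough weights-only estimate: parameters (in billions) x bits per weight / 8
# gives gigabytes. Shell integer arithmetic, so results round down.
weights_gb() {
  local params_billion="$1" bits_per_weight="$2"
  echo $(( params_billion * bits_per_weight / 8 ))
}

GPU_COUNT=8
GPU_MEMORY_GB=80   # each H100 SXM5 has 80 GB of memory
TOTAL_GPU_GB=$(( GPU_COUNT * GPU_MEMORY_GB ))

echo "Total GPU memory: ${TOTAL_GPU_GB} GB"
echo "16-bit weights:   $(weights_gb 671 16) GB"
echo "8-bit weights:    $(weights_gb 671 8) GB"
echo "4-bit weights:    $(weights_gb 671 4) GB"
```

Under these assumptions, only the 4-bit estimate leaves headroom within the VM's total GPU memory, which is why a quantised build is the practical choice on a single machine.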

Choose the Operating System

- Select the "Ubuntu Server 22.04 LTS R550 CUDA 12.4 with Docker" image.

Select a keypair

- Select one of the keypairs in your account. Don't have a keypair yet? See our Getting Started tutorial for creating one.

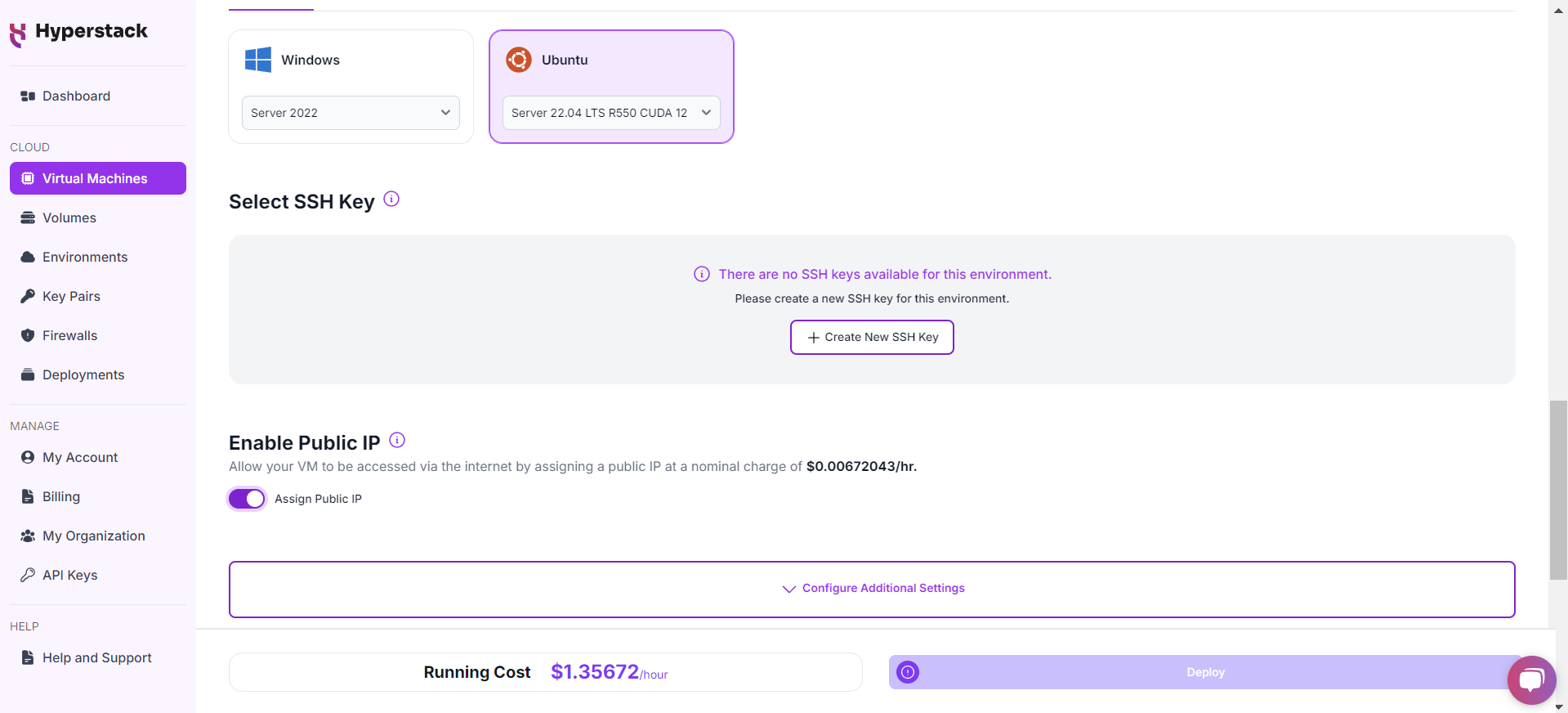

Network Configuration

- Ensure you assign a Public IP to your virtual machine (see the attached screenshot).

- This allows you to access your VM from the internet, which is crucial for remote management and API access.

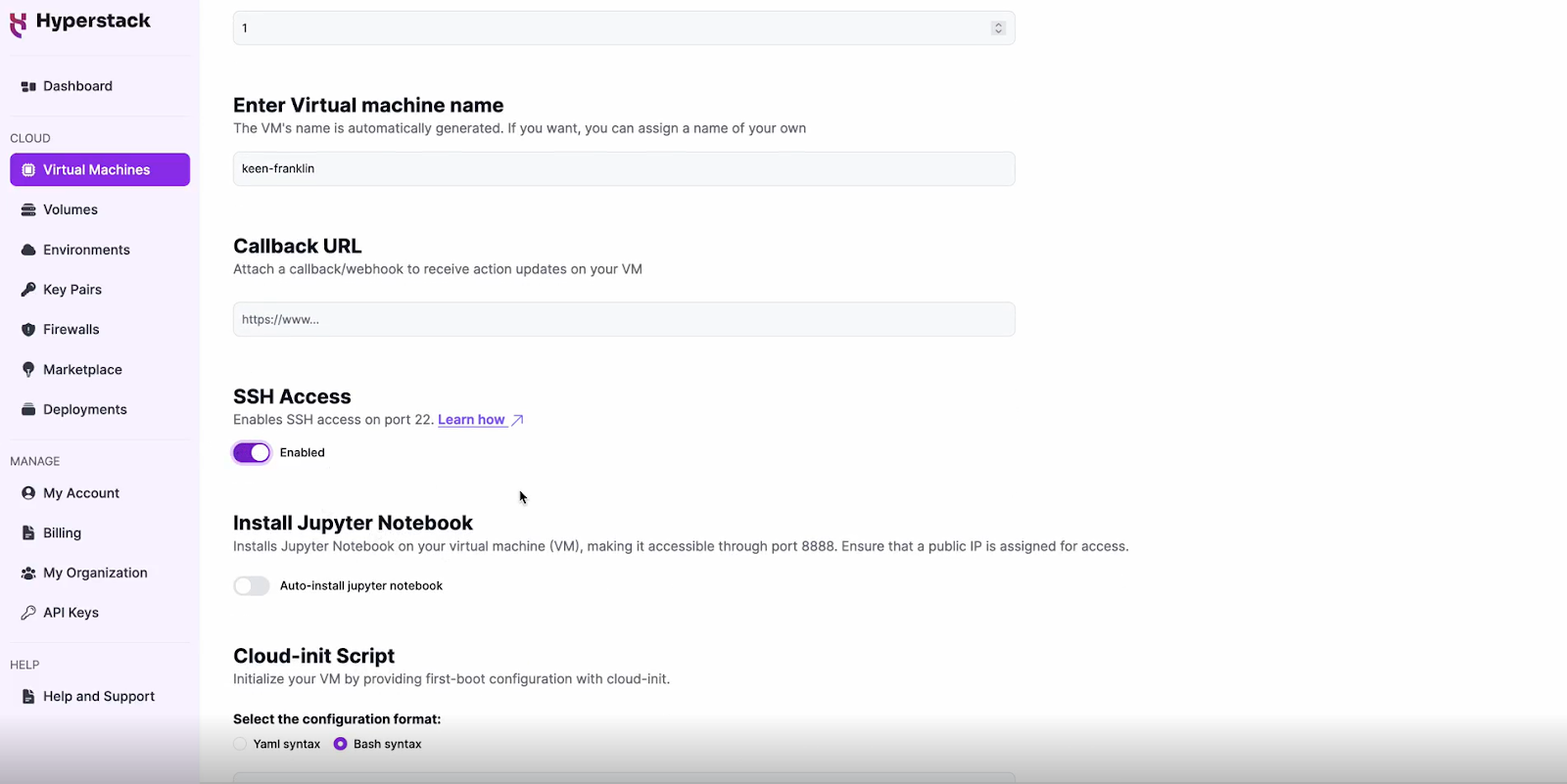

Enable SSH Access

- Make sure to enable an SSH connection.

- You'll need this to securely connect and manage your VM.

Review and Deploy

- Double-check all your settings.

- Click the "Deploy" button to launch your virtual machine.

Step 3: Accessing Your VM

Once the initialisation is complete, you can access your VM:

Locate SSH Details

- In the Hyperstack dashboard, find your VM's details.

- Look for the public IP address, which you will need to connect to your VM with SSH.

Connect via SSH

- Open a terminal on your local machine.

- Use the command ssh -i [path_to_ssh_key] [os_username]@[vm_ip_address] (e.g. ssh -i /users/username/downloads/keypair_hyperstack ubuntu@0.0.0.0)

- Replace [path_to_ssh_key], [os_username] and [vm_ip_address] with your key file path and the details provided by Hyperstack.
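The connection step can be sketched as a small shell helper. The key path, username and address in the usage comments are placeholders (203.0.113.10 is a documentation-only address), and `ssh_command` is a hypothetical helper name, not part of any Hyperstack tooling.

```shell
# Hypothetical helper: assemble the SSH command for a VM.
# Arguments: key path, OS username, public IP address (all placeholders).
ssh_command() {
  local key_path="$1" os_user="$2" vm_ip="$3"
  printf 'ssh -i %s %s@%s\n' "$key_path" "$os_user" "$vm_ip"
}

# Usage on your local machine (not executed here):
#   chmod 600 "$HOME/.ssh/keypair_hyperstack"   # OpenSSH rejects private keys readable by others
#   ssh_command "$HOME/.ssh/keypair_hyperstack" ubuntu 203.0.113.10
#   # then copy and run the printed command
```

Printing the command first, rather than running it directly, makes it easy to spot a wrong key path or address before connecting.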

Step 4: Setting up DeepSeek R1 with Open WebUI

- To set up DeepSeek-R1, SSH into your machine. If you are having trouble connecting with SSH, watch our recent platform tour video (at 4:08) for a demo. Once connected, use the commands below to set up DeepSeek-R1 with Open WebUI.

- Execute the command below to launch Open WebUI on port 3000.

docker run -d \
-p "3000:8080" \
--gpus=all \
-v /ephemeral/ollama:/root/.ollama \
-v open-webui:/app/backend/data \
--name "open-webui" \
--restart always \
"ghcr.io/open-webui/open-webui:ollama"

- Execute the following command to start downloading DeepSeek-R1 to your machine.

docker exec -it "open-webui" ollama pull deepseek-r1:671b

The pull command above downloads and hosts the int4 quantised version of DeepSeek-R1, which ensures it fits on a single machine. See this model card for more information.
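Once the pull has finished, it can be useful to confirm that the container is up and the model is present. The sketch below uses two hypothetical helpers that read command output on stdin, so they only inspect text and never call Docker themselves. It assumes `ollama list` prints a header row followed by one row per model with the model name in the first column.

```shell
# Hypothetical helper: succeed if the container name given as $1 appears
# as a whole line on stdin (stdin: docker ps --format '{{.Names}}').
container_is_running() {
  grep -Fxq -- "$1"
}

# Hypothetical helper: succeed if the model name given as $1 appears in the
# first column of stdin, skipping the header row (stdin: ollama list).
model_is_pulled() {
  awk -v name="$1" 'NR > 1 && $1 == name { found = 1 } END { exit !found }'
}

# Usage on the VM (not executed here):
#   docker ps --format '{{.Names}}' | container_is_running open-webui && echo "container running"
#   docker exec open-webui ollama list | model_is_pulled deepseek-r1:671b && echo "model present"
```

If the first check fails, `docker logs --tail 20 open-webui` is a reasonable next step for finding out why the container stopped.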

Interacting with DeepSeek-R1

-

Open your VM's firewall settings.

-

Allow port 3000 for your IP address (or leave it open to all IPs, though this is less secure and not recommended). For instructions, see here.

-

Visit http://[public-ip]:3000 in your browser. For example: http://198.145.126.7:3000

-

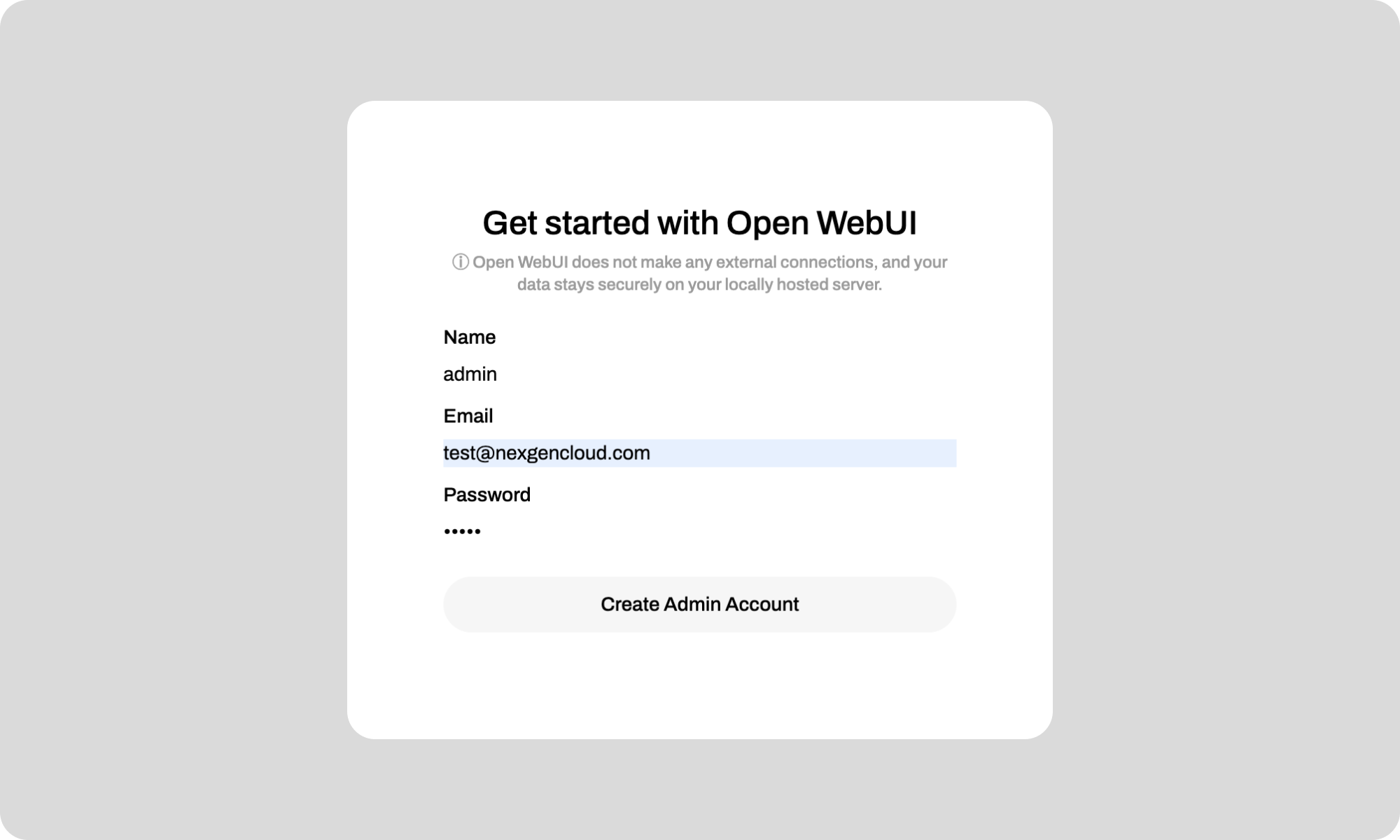

Set up an admin account for OpenWebUI and save your username and password for future logins. See the attached screenshot.
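If the page does not load in the browser, a quick reachability check from your local machine can help separate firewall problems from application problems. The sketch below builds the URL and shows a `curl` call in the usage comments. `webui_url` is a hypothetical helper name, and the IP address is the example address used above.

```shell
# Hypothetical helper: build the Open WebUI URL from a public IP and an
# optional port (defaults to 3000, the port published by the container).
webui_url() {
  local public_ip="$1" port="${2:-3000}"
  printf 'http://%s:%s\n' "$public_ip" "$port"
}

# Usage on your local machine (not executed here):
#   curl -sS -o /dev/null -w '%{http_code}\n' --max-time 10 "$(webui_url 198.145.126.7)"
```

A timeout usually points at the firewall rule, while any HTTP status code means the port is reachable and the container is answering.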

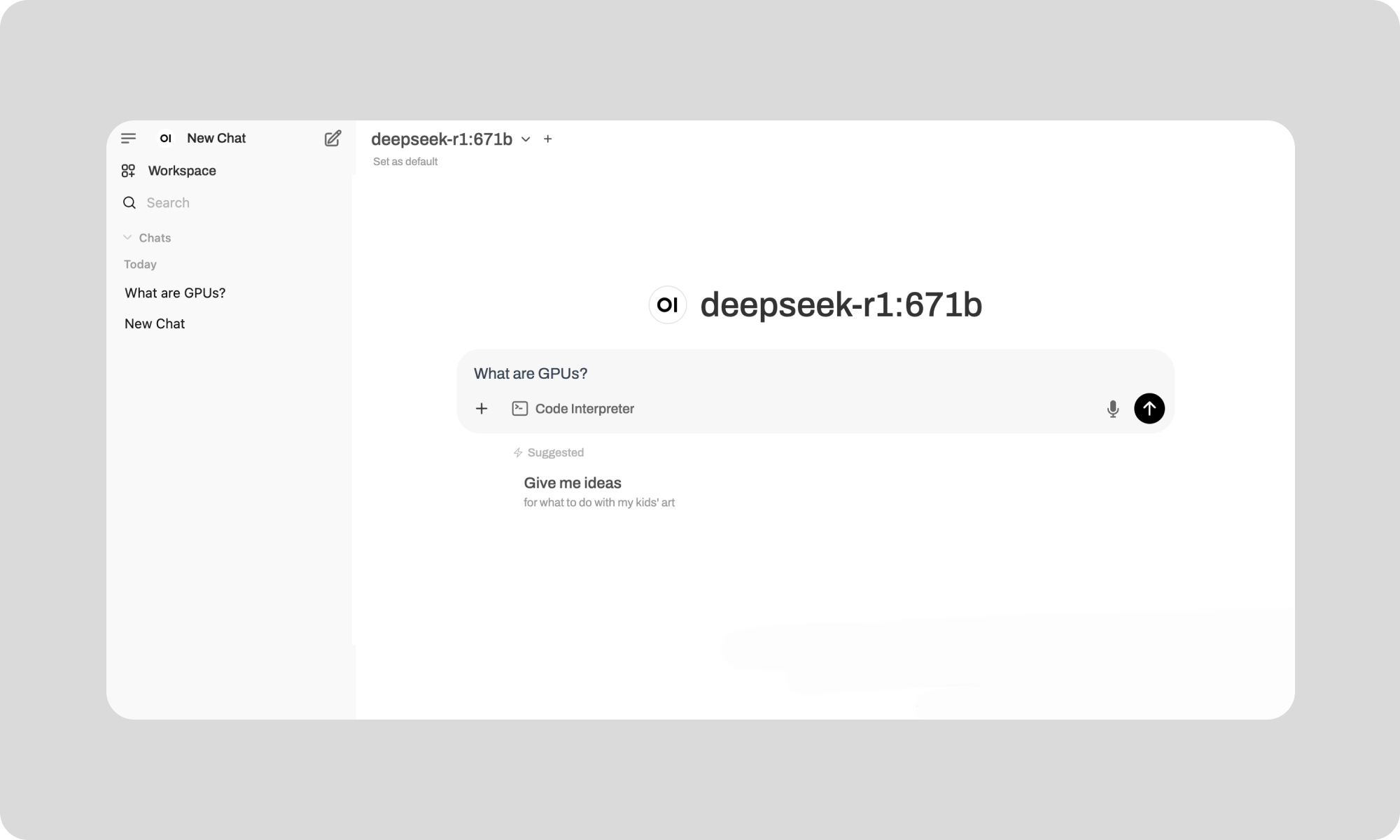

And voila, you can start talking to your self-hosted DeepSeek R1! See an example below.

Please note: for longer conversations, Ollama might run into errors. To fix these, see the instructions below on increasing the context size. For more info on the issue see here.

Increasing Context Size for DeepSeek-R1

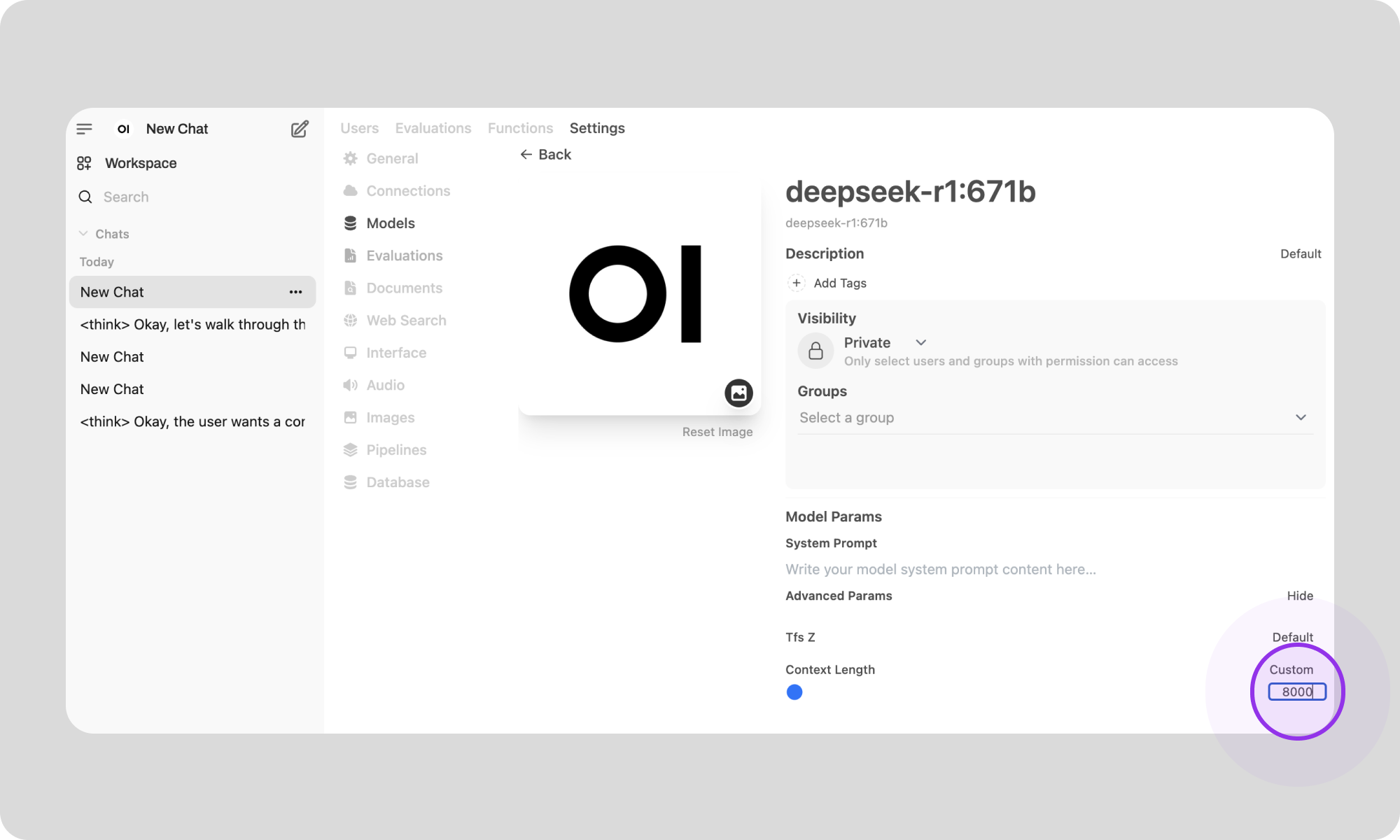

The model is initially set with a context size of 2048 tokens, which may be insufficient for longer input prompts or output text. To increase the context size, follow the steps below.

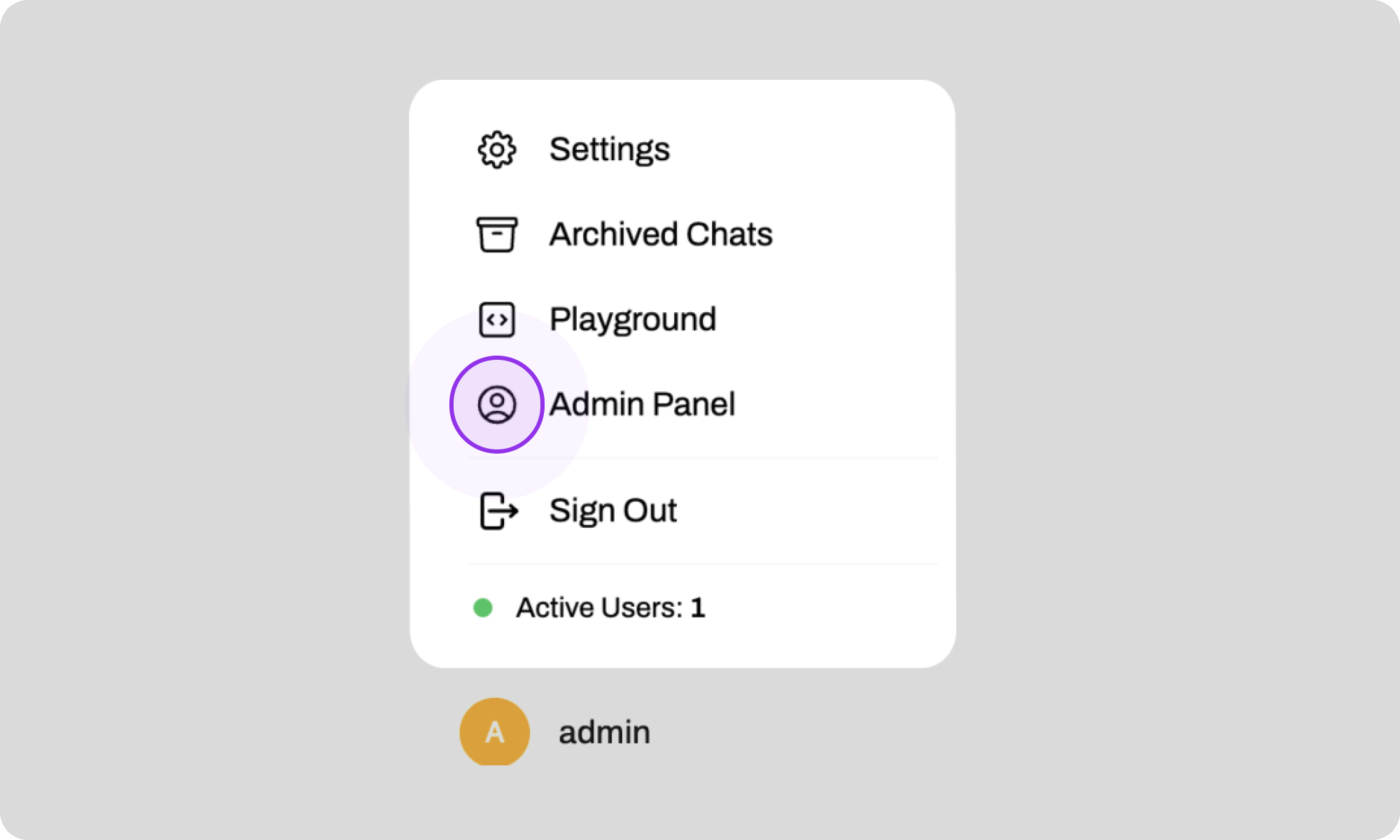

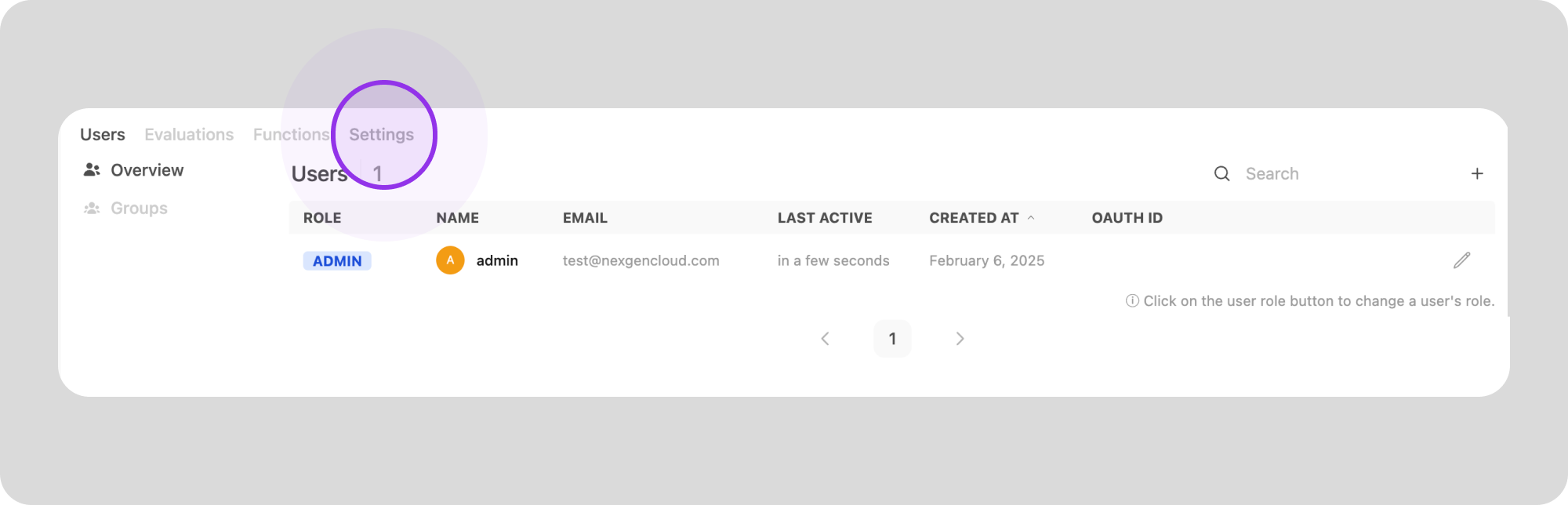

- Click on your username at the bottom left and then on 'Admin Panel'. See screenshot:

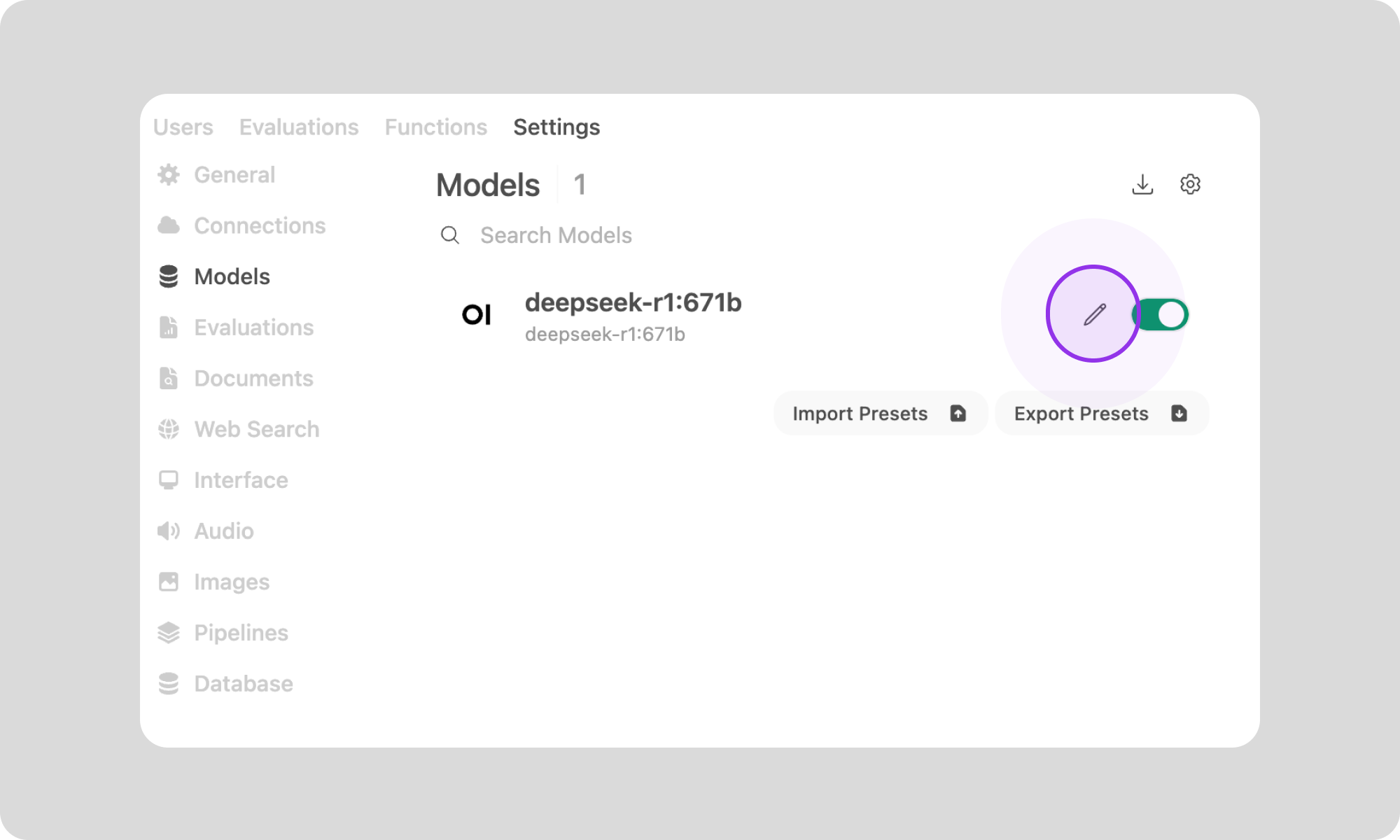

- Click on 'Models' in the left sidebar. This will take you to an overview of all the models available on your machine.

- Click on the pencil icon to the right of 'DeepSeek-r1-671B'.

- Click on 'Advanced params' to find the context length.

- You can now increase the 'Context Length' (e.g. 8192).

Congratulations, now you have DeepSeek-R1 running with a longer context size. This will allow you to have longer conversations and/or talk about more complex subjects.
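As an alternative to the admin panel, the context size can also be set from the command line by creating a model variant with an Ollama Modelfile, where `num_ctx` is the context length in tokens. This is a minimal sketch: `write_modelfile` is a hypothetical helper, and the variant name `deepseek-r1-8k` and the file paths in the usage comments are assumptions.

```shell
# Hypothetical helper: write an Ollama Modelfile that derives a variant of a
# base model with a larger context window.
# Arguments: base model name, context length in tokens, output file path.
write_modelfile() {
  local base_model="$1" context_tokens="$2" out_path="$3"
  printf 'FROM %s\nPARAMETER num_ctx %s\n' "$base_model" "$context_tokens" > "$out_path"
}

# Usage on the VM (not executed here; names and paths are assumptions):
#   write_modelfile deepseek-r1:671b 8192 ./Modelfile
#   docker cp ./Modelfile open-webui:/tmp/Modelfile
#   docker exec -it open-webui ollama create deepseek-r1-8k -f /tmp/Modelfile
```

The new variant should then appear alongside the original model in Open WebUI. A larger context uses more GPU memory, so it is worth increasing it in steps.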

Step 5: Hibernating Your VM

When you're finished with your current workload, you can hibernate your VM to avoid incurring unnecessary costs:

- In the Hyperstack dashboard, locate your virtual machine.

- Look for a "Hibernate" option.

- Click to hibernate the VM, which will stop billing for compute resources while preserving your setup.

Why Deploy DeepSeek-R1 on Hyperstack?

Hyperstack is a cloud platform designed to accelerate AI and machine learning workloads. Here's why it's an excellent choice for deploying DeepSeek-R1:

- Availability: Hyperstack provides access to the latest and most powerful GPUs such as the NVIDIA H100 on-demand, specifically designed to handle large language models.

- Ease of Deployment: With pre-configured environments and one-click deployments, setting up complex AI models becomes significantly simpler on our platform.

- Scalability: You can easily scale your resources up or down based on your computational needs.

- Cost-Effectiveness: You pay only for the resources you use with our cost-effective cloud GPU pricing.

- Integration Capabilities: Hyperstack provides easy integration with popular AI frameworks and tools.

FAQs

What is DeepSeek-R1?

DeepSeek-R1 is a 671B parameter open-source Mixture-of-Experts language model designed for high-performance logical reasoning and problem-solving.

What are the key features of DeepSeek-R1?

The key features of DeepSeek-R1 include:

- Advanced Reasoning Capabilities: DeepSeek-R1 excels in logical inference, mathematical reasoning, and real-time problem-solving, outperforming other models in tasks requiring structured thinking.

- Reinforcement Learning Training: The model employs reinforcement learning techniques, allowing it to develop advanced reasoning skills and generate logically sound responses.

- Open-Source: Released under the MIT license, DeepSeek-R1 is freely available for use, modification and redistribution.

- Distilled Model Variants: DeepSeek-R1 includes distilled versions with parameter counts ranging from 1.5B to 70B, catering to various computational requirements and use cases.

Are there smaller versions of DeepSeek-R1?

Yes, DeepSeek-R1 offers distilled model variants ranging from 1.5B to 70B parameters for different use cases.

How can I manage costs while using DeepSeek-R1 on Hyperstack?

You can use the "Hibernate" option on Hyperstack to pause your VM and reduce costs when not in use.

Can I increase the context size of DeepSeek-R1 on Hyperstack?

Yes, you can adjust the context size via the Open WebUI admin panel for longer input and output sequences.
