
In our latest article, we guide you through deploying DeepSeek-R1 1.58-Bit on a budget using Hyperstack. Thanks to Unsloth’s dynamic quantisation techniques, the model size is reduced from 720GB to just 131GB without sacrificing performance. We provide a step-by-step tutorial covering everything from setting up your Hyperstack VM with the recommended 4xL40 configuration to running DeepSeek-R1 via Open WebUI.

DeepSeek-R1 is making waves as a powerful open-source AI model with 671B parameters that excels at logical reasoning and problem-solving. However, deploying it can be daunting, because even the int4 model needs 400GB+ of VRAM. Well, not anymore!

The team at Unsloth has achieved an impressive reduction of roughly 80% in model size, bringing it down from the original 720GB to just 131GB using dynamic quantisation techniques. By selectively quantising certain layers without compromising performance, they’ve made running DeepSeek-R1 on a budget possible (see their work here).
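As a quick sanity check on the figures above, the reduction can be computed directly. This is a minimal sketch that uses only the two sizes quoted in this article; the variable names are illustrative.

```shell
# Model sizes quoted in the article, in GB.
ORIGINAL_GB=720
QUANTISED_GB=131

# Percentage reduction = (1 - quantised / original) * 100
REDUCTION_PCT=$(awk -v o="$ORIGINAL_GB" -v q="$QUANTISED_GB" \
  'BEGIN { printf "%.1f", (1 - q / o) * 100 }')

echo "Size reduction: ${REDUCTION_PCT}%"
```

The result comes out just under 82%, which is consistent with the rounded figure of about 80%.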

In our latest tutorial, we provide a detailed step-by-step guide to host DeepSeek-R1 on a budget with Hyperstack.

Steps to Host DeepSeek-R1 1.58-Bit

Now, let's walk through the step-by-step process of deploying DeepSeek-R1 1.58-Bit on Hyperstack.

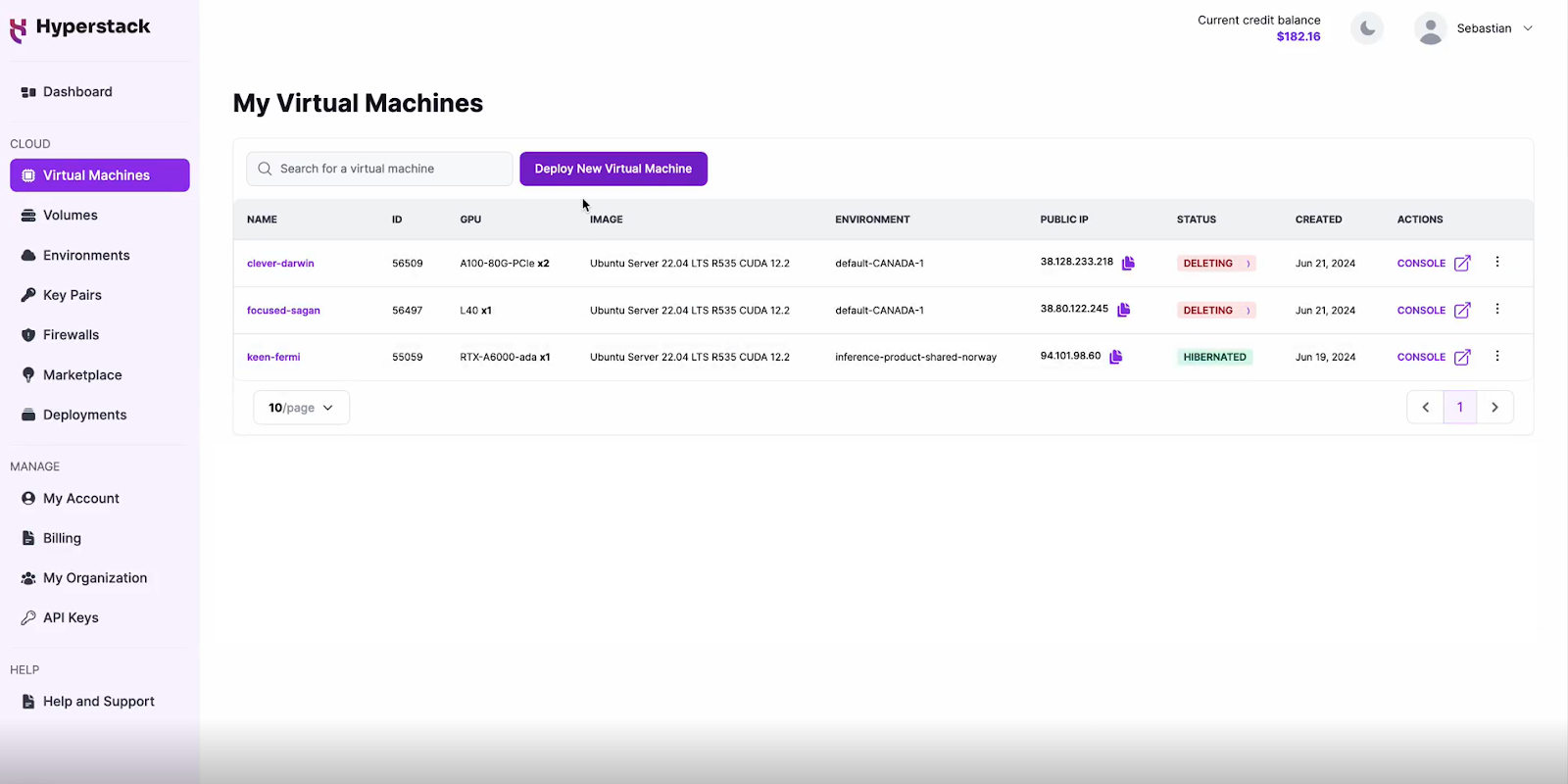

Step 1: Accessing Hyperstack

- Go to the Hyperstack website and log in to your account.

- If you're new to Hyperstack, you must create an account and set up your billing information. Check our documentation to get started with Hyperstack.

- Once logged in, you'll be greeted by the Hyperstack dashboard, which provides an overview of your resources and deployments.

Step 2: Deploying a New Virtual Machine

Initiate Deployment

- Look for the "Deploy New Virtual Machine" button on the dashboard.

- Click it to start the deployment process.

Select Hardware Configuration

- For DeepSeek-R1 1.58-Bit requirements, go to the hardware options and choose the "4xL40" flavour.

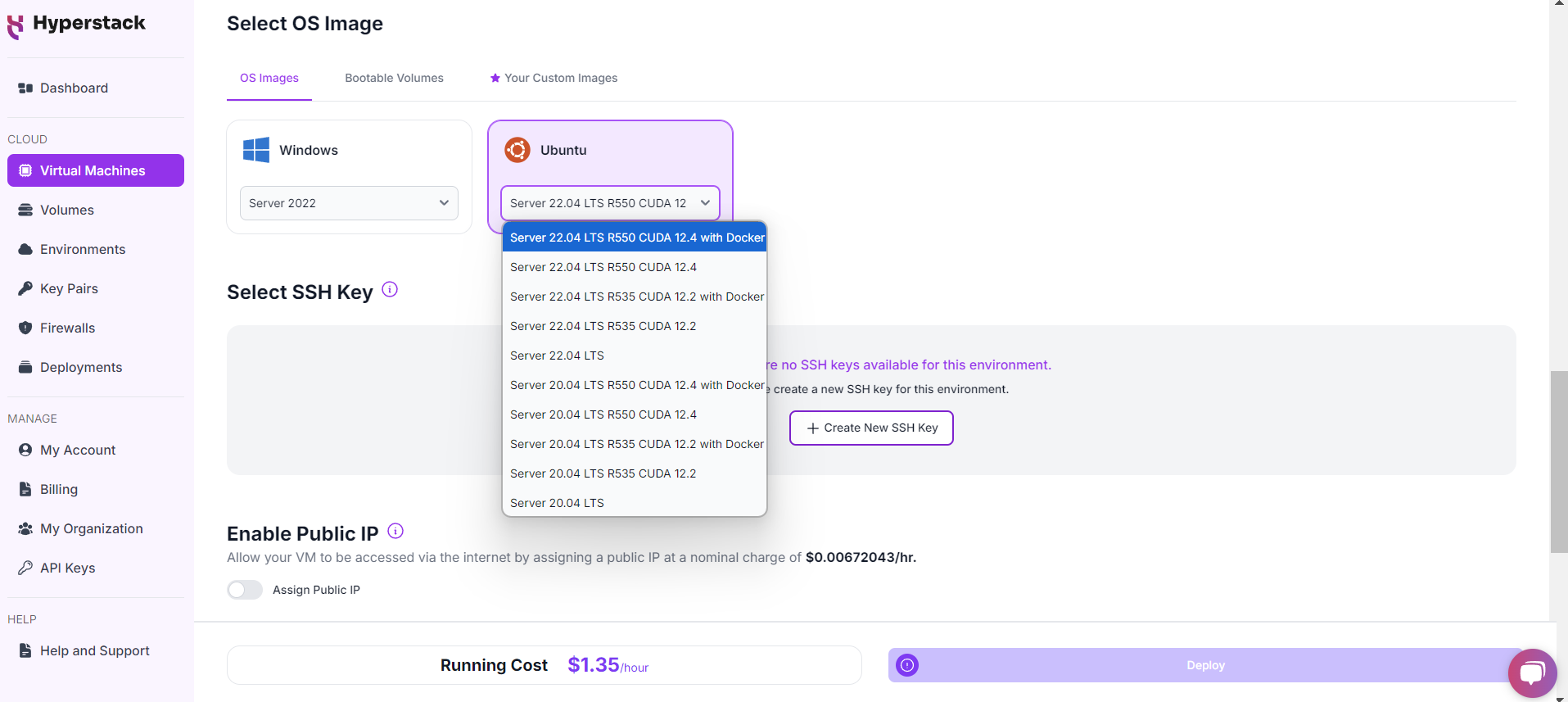
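A rough way to see why the 4xL40 flavour fits this model: each NVIDIA L40 has 48GB of VRAM, so four of them provide 192GB against the 131GB of quantised weights. The sketch below is only back-of-the-envelope arithmetic. It assumes the weights are the dominant cost and ignores the extra memory needed for the KV cache and runtime overhead.

```shell
GPU_COUNT=4
VRAM_PER_GPU_GB=48   # memory size of one NVIDIA L40
MODEL_GB=131         # quantised model size quoted in this article

TOTAL_VRAM_GB=$((GPU_COUNT * VRAM_PER_GPU_GB))
HEADROOM_GB=$((TOTAL_VRAM_GB - MODEL_GB))

echo "Total VRAM: ${TOTAL_VRAM_GB}GB, headroom after weights: ${HEADROOM_GB}GB"
```

The remaining headroom is what is left for context and overhead, which is one reason very long conversations may need tuning later on.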

Choose the Operating System

- Select the "Ubuntu Server 22.04 LTS R550 CUDA 12.4 with Docker".

Select a keypair

- Select one of the keypairs in your account. Don't have a keypair yet? See our Getting Started tutorial for creating one.

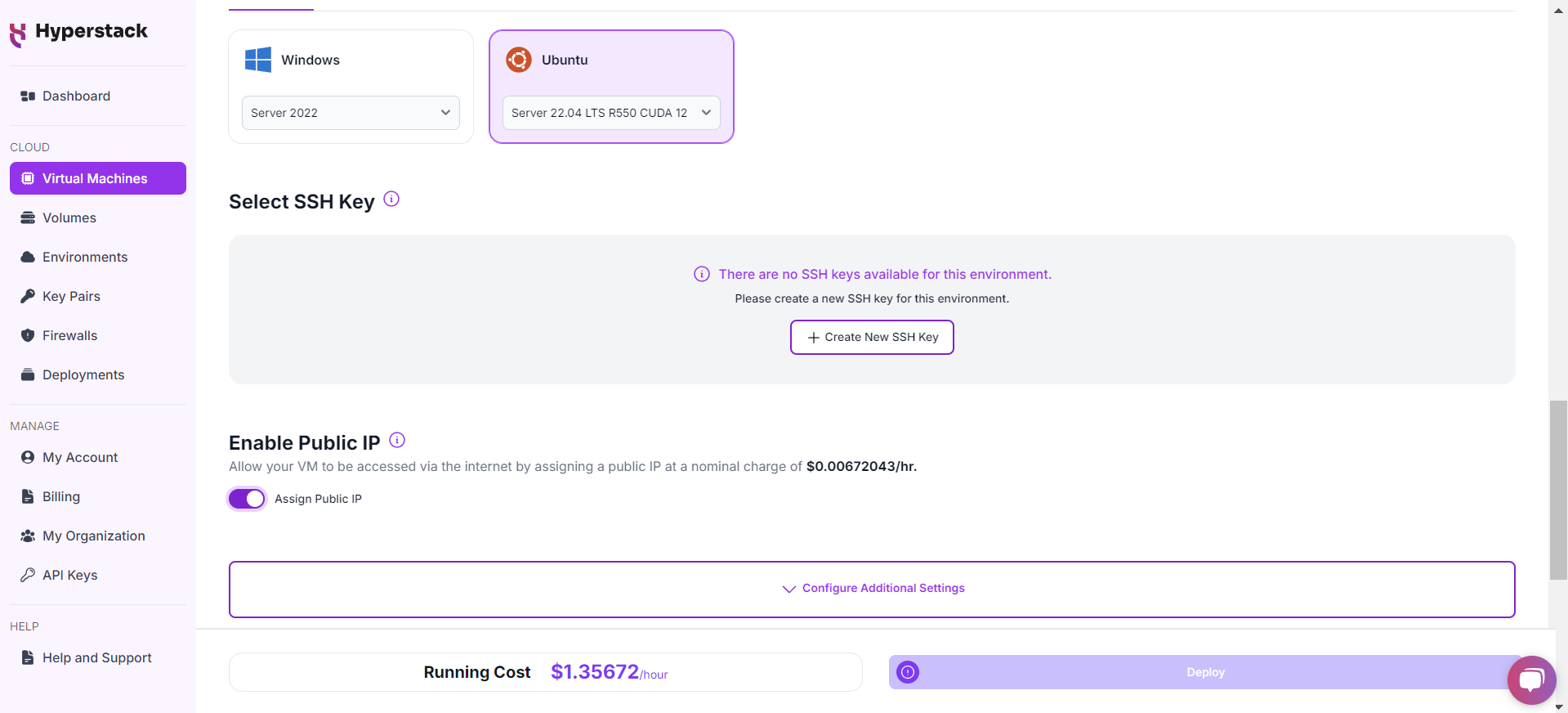

Network Configuration

- Ensure you assign a Public IP to your virtual machine.

- This allows you to access your VM from the internet, which is crucial for remote management and API access.

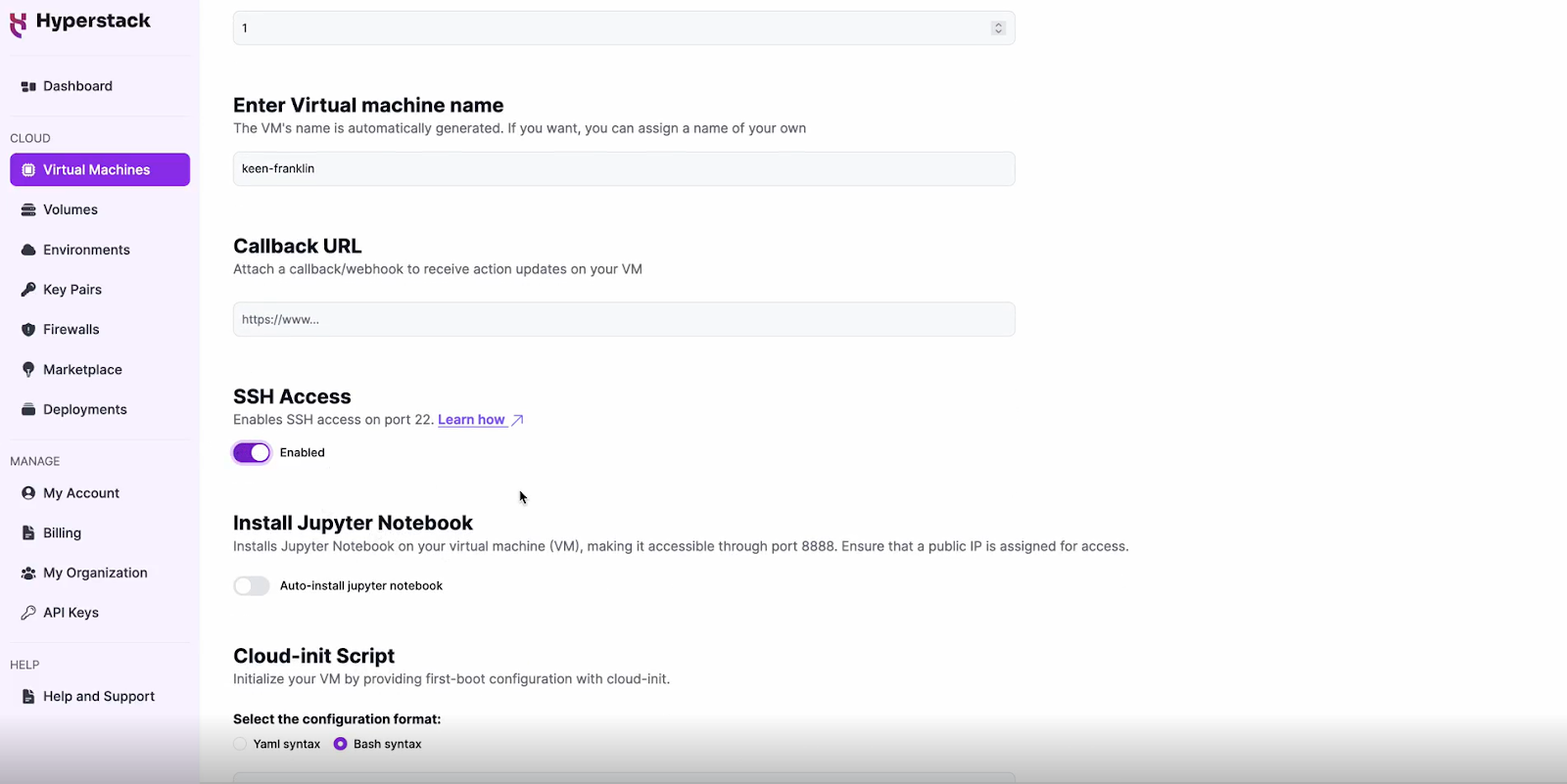

Enable SSH Access

- Make sure to enable an SSH connection.

- You'll need this to securely connect and manage your VM.

Review and Deploy

- Double-check all your settings.

- Click the "Deploy" button to launch your virtual machine.

Step 3: Accessing Your VM

Once the initialisation is complete, you can access your VM:

Locate SSH Details

- In the Hyperstack dashboard, find your VM's details.

- Look for the public IP address, which you will need to connect to your VM with SSH.

Connect via SSH

- Open a terminal on your local machine.

- Use the command ssh -i [path_to_ssh_key] [os_username]@[vm_ip_address] (e.g. ssh -i /users/username/downloads/keypair_hyperstack ubuntu@0.0.0.0)

- Replace path_to_ssh_key, os_username and vm_ip_address with the details provided by Hyperstack.
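The connection step can be sketched as a small script. The key path and IP address below are hypothetical placeholders (the IP comes from a range reserved for documentation), and the `chmod` line reflects the fact that ssh refuses private keys that other users can read.

```shell
# Hypothetical values: replace with your own key path and the public IP
# shown in the Hyperstack dashboard.
KEY_PATH="$HOME/.ssh/keypair_hyperstack"
VM_USER="ubuntu"
VM_IP="203.0.113.10"

# Restrict the key's permissions if the file exists.
if [ -f "$KEY_PATH" ]; then
  chmod 600 "$KEY_PATH"
fi

# Assemble and print the command so it can be checked before running it.
SSH_CMD="ssh -i $KEY_PATH $VM_USER@$VM_IP"
echo "$SSH_CMD"
```

Printing the command first makes it easy to spot a malformed address or a wrong key path before attempting the connection.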

Setting up DeepSeek-R1 1.58-Bit with Open WebUI

Follow the below steps to set up DeepSeek-R1 1.58-bit with Open WebUI:

- Connect to your server via SSH.

- Run the following command to launch OpenWebUI on port 3000.

      docker run -d \
        -p "3000:8080" \
        --gpus=all \
        -v /ephemeral/ollama:/root/.ollama \
        -v open-webui:/app/backend/data \
        --name "open-webui" \
        --restart always \
        "ghcr.io/open-webui/open-webui:ollama"

- Execute the commands below to get your system ready for DeepSeek-R1 1.58-Bit.

      # Install Git LFS.
      sudo apt-get install -y git-lfs

      # Download Nix for easy installation of llama-cpp (non-interactively).
      echo "Installing Nix..."
      sh <(curl -L https://nixos.org/nix/install)

      # Add Nix to the PATH.
      source ~/.nix-profile/etc/profile.d/nix.sh

      # Install llama-cpp.
      echo "Installing llama-cpp via nix..."
      nix profile install nixpkgs#llama-cpp --extra-experimental-features nix-command --extra-experimental-features flakes

- Run the provided commands to download and merge the model files. Merging is required since Ollama doesn’t support multi-file GGUF models (see more info here).

      # Download the Hugging Face model.
      echo "Downloading the DeepSeek model..."
      cd /ephemeral/
      sudo chown -R ubuntu:ubuntu /ephemeral/
      git lfs install
      GIT_LFS_SKIP_SMUDGE=1 git clone https://huggingface.co/unsloth/DeepSeek-R1-GGUF
      cd DeepSeek-R1-GGUF
      git lfs fetch --include="DeepSeek-R1-UD-IQ1_S/DeepSeek-R1-UD-IQ1_S-*.gguf"
      git lfs checkout "DeepSeek-R1-UD-IQ1_S/DeepSeek-R1-UD-IQ1_S-00001-of-00003.gguf"
      git lfs checkout "DeepSeek-R1-UD-IQ1_S/DeepSeek-R1-UD-IQ1_S-00002-of-00003.gguf"
      git lfs checkout "DeepSeek-R1-UD-IQ1_S/DeepSeek-R1-UD-IQ1_S-00003-of-00003.gguf"

      # Merge model file parts.
      cd DeepSeek-R1-UD-IQ1_S
      echo "Merging model parts..."
      llama-gguf-split --merge DeepSeek-R1-UD-IQ1_S-00001-of-00003.gguf DeepSeek-R1-UD-IQ1_S-merged.gguf

      # Move the files to the directory that our OpenWebUI container can access.
      mkdir -p /ephemeral/ollama/local_models
      mv DeepSeek-R1-UD-IQ1_S-merged.gguf /ephemeral/ollama/local_models/DeepSeek-R1-UD-IQ1_S-merged.gguf

- Create a Modelfile compatible with Ollama and run it inside the container.

      # Write the Modelfile with model configuration.
      cat <<EOF > /ephemeral/ollama/local_models/Modelfile
      FROM /root/.ollama/local_models/DeepSeek-R1-UD-IQ1_S-merged.gguf
      PARAMETER num_ctx 4096
      EOF

      # Create the model inside the container from the Modelfile.
      echo "Creating model DeepSeek-R1-GGUF-IQ1_S inside the Docker container..."
      docker exec -it open-webui ollama create DeepSeek-R1-GGUF-IQ1_S -f /root/.ollama/local_models/Modelfile
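Once the create command finishes, it can be worth confirming that Ollama has registered the model. The sketch below assumes the container and model names used in this tutorial. `model_is_listed` is a hypothetical helper, not part of Ollama, that scans the output of `ollama list` for a given model name.

```shell
# Hypothetical helper: succeeds if the model name passed as $1 appears in
# the first column of `ollama list` output read from stdin.
model_is_listed() {
  awk -v name="$1" '
    # Names are printed as "name:tag", so compare the part before the colon.
    # The comparison is case-insensitive as a precaution.
    { split($1, parts, ":"); if (tolower(parts[1]) == tolower(name)) found = 1 }
    END { exit(found ? 0 : 1) }
  '
}

# Usage on the VM (requires the open-webui container to be running):
#   docker exec open-webui ollama list | model_is_listed "DeepSeek-R1-GGUF-IQ1_S" \
#     && echo "Model is registered" || echo "Model not found"
```

If the model is not found, re-check the path in the Modelfile, since it must be the path as seen from inside the container.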

Please note: For long conversations, Ollama may show errors. Fix this by increasing the context size (num_ctx 4096) above to a higher value. For more info on the issue see here.
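One way to raise the context size is to rewrite the num_ctx line in the Modelfile and then recreate the model. The sketch below works on a temporary copy so it is safe to run anywhere. On the VM the real file is /ephemeral/ollama/local_models/Modelfile, and the value 8192 is only an illustrative choice, since larger contexts need more memory.

```shell
# Throwaway copy of the Modelfile used in this tutorial.
MODELFILE="$(mktemp)"
cat <<'EOF' > "$MODELFILE"
FROM /root/.ollama/local_models/DeepSeek-R1-UD-IQ1_S-merged.gguf
PARAMETER num_ctx 4096
EOF

NEW_CTX=8192   # illustrative value, not a recommendation

# Rewrite only the num_ctx line, leaving the rest of the file untouched.
sed "s/^PARAMETER num_ctx .*/PARAMETER num_ctx ${NEW_CTX}/" "$MODELFILE" > "${MODELFILE}.new"
mv "${MODELFILE}.new" "$MODELFILE"

cat "$MODELFILE"
```

After editing the real Modelfile, re-run the ollama create command from the previous step so that the new setting takes effect.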

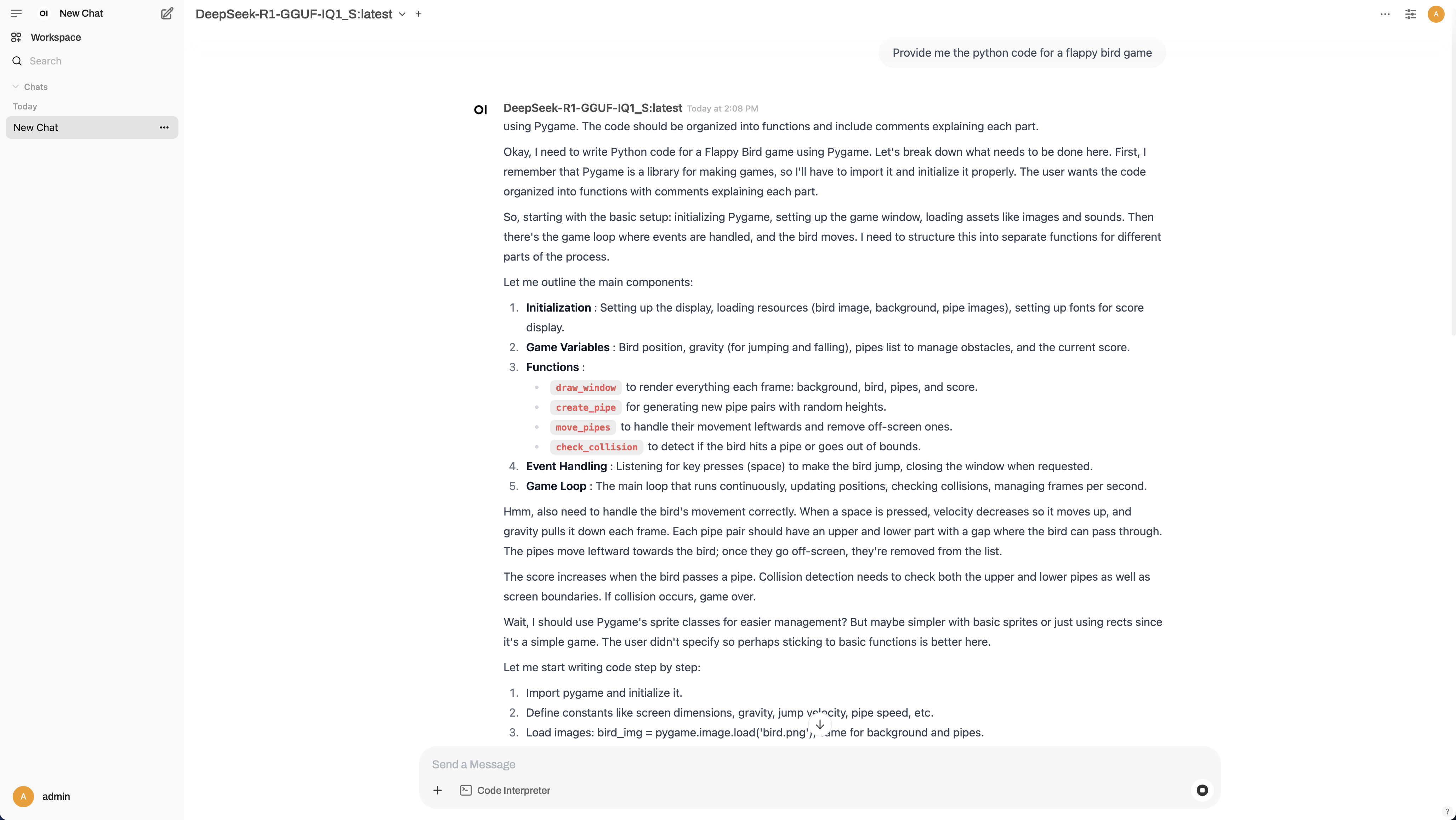

Interacting with DeepSeek-R1 1.58-Bit

To start interacting with your DeepSeek-R1 1.58-Bit, follow the below steps:

-

Access Your VM Firewall Settings

-

Enable traffic on port 3000 for your specific IP address. (You can leave this open to all IPs for broader access, but this is less secure and not recommended.) For instructions on changing firewall settings, see here.

-

In your browser, go to http://[public-ip]:3000. For example: http://128.2.1.1:3000

-

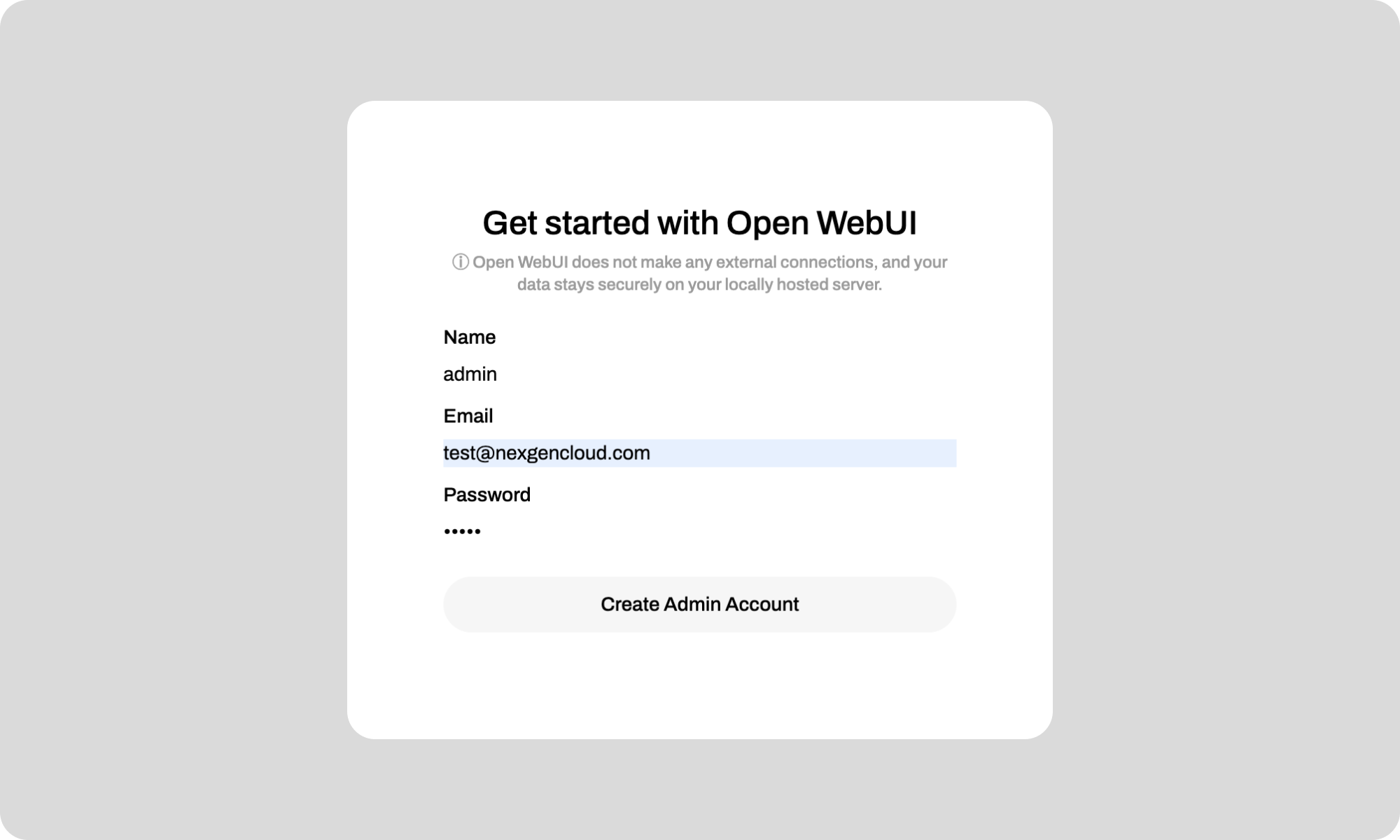

Set up an admin account for OpenWebUI. Be sure to save your username and password.

5. You’re all set to interact with your self-hosted DeepSeek model.
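If the page does not load, a quick check from your local machine can show whether port 3000 is reachable at all. This is a minimal sketch: `webui_url` and `check_webui` are hypothetical helper names, and the IP address is the example used above.

```shell
# Build the Open WebUI URL from the VM's public IP (port defaults to 3000).
webui_url() {
  printf 'http://%s:%s\n' "$1" "${2:-3000}"
}

# Print the HTTP status code returned by Open WebUI, giving up after 10 seconds.
check_webui() {
  curl -sS -o /dev/null -w '%{http_code}\n' --max-time 10 "$(webui_url "$1")"
}

# Show the URL that would be requested.
webui_url "128.2.1.1"

# Usage from your local machine:
#   check_webui "128.2.1.1"
```

A timeout usually points to the firewall rule, while a connection that is refused suggests the container is not running.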

Disclaimer: Please note that this is a highly quantised version of DeepSeek and output may be unstable.

Step 5: Hibernating Your VM

When you're finished with your current workload, you can hibernate your VM to avoid incurring unnecessary costs:

- In the Hyperstack dashboard, locate your virtual machine.

- Look for a "Hibernate" option.

- Click to hibernate the VM, which will stop billing for compute resources while preserving your setup.

Why Deploy DeepSeek-R1 on Hyperstack?

Hyperstack is a cloud platform designed to accelerate AI and machine learning workloads. Here's why it's an excellent choice for deploying DeepSeek-R1 1.58-Bit:

- Availability: Hyperstack provides access to the latest and most powerful GPUs such as the NVIDIA H100 PCIe on-demand, specifically designed to handle large language models.

- Ease of Deployment: With pre-configured environments and one-click deployments, setting up complex AI models becomes significantly simpler on our platform.

- Scalability: You can easily scale your resources up or down based on your computational needs.

- Cost-Effectiveness: You pay only for the resources you use with our cost-effective cloud GPU pricing.

- Integration Capabilities: Hyperstack provides easy integration with popular AI frameworks and tools.

FAQs

What is DeepSeek-R1?

DeepSeek-R1 is a 671B parameter, open-source Mixture-of-Experts language model designed for superior logical reasoning, mathematical problem-solving and structured thinking tasks, offering high performance in complex inference and decision-making scenarios.

What are the key features of DeepSeek-R1?

DeepSeek-R1 offers advanced reasoning abilities, reinforcement learning-based training, and open-source accessibility under the MIT license. It includes distilled model variants from 1.5B to 70B parameters.

What is DeepSeek-R1 1.58-Bit?

DeepSeek-R1 1.58-Bit is a quantised version of the original 671-billion-parameter DeepSeek-R1 model, reduced from 720GB to 131GB using Unsloth's dynamic quantisation.

How do I get started with Hyperstack to host DeepSeek-R1?

Sign up at https://console.hyperstack.cloud to get started with Hyperstack.

Which GPU is best for hosting DeepSeek-R1 1.58-bit on Hyperstack?

For hosting DeepSeek-R1 1.58-bit on Hyperstack, you can opt for the NVIDIA L40 GPU in the 4xL40 configuration. Our NVIDIA L40 GPU is available for $1.00 per hour.
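To get a feel for what a session might cost, the quoted price can be multiplied out. This is a rough sketch that assumes the $1.00 rate applies per GPU and that the session length is a hypothetical 8 hours. It leaves out any storage or networking charges.

```shell
GPU_COUNT=4
RATE_PER_GPU_HOUR=1.00   # price quoted above, assumed to be per GPU
HOURS=8                  # hypothetical session length

# Estimated compute cost = GPUs * hourly rate * hours
COST=$(awk -v n="$GPU_COUNT" -v r="$RATE_PER_GPU_HOUR" -v h="$HOURS" \
  'BEGIN { printf "%.2f", n * r * h }')

echo "Estimated compute cost: \$${COST}"
```

Hibernating the VM between sessions keeps the billed hours close to the hours actually used.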

Explore our tutorials on Deploying and Using Llama 3.2 and Llama 3.1 on Hyperstack.
